T4K Components

This section describes the architecture of Trilio for Kubernetes.

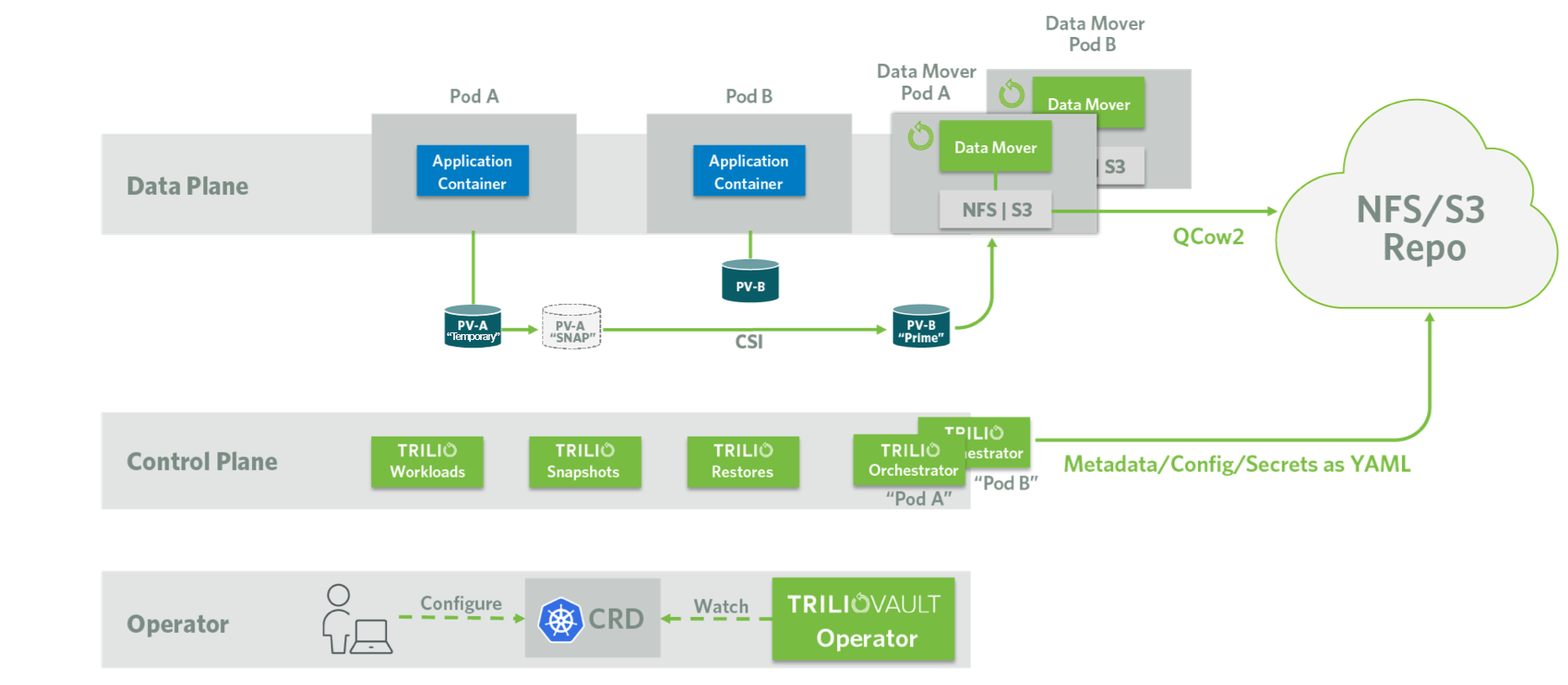

Trilio for Kubernetes is a cloud-native application whose architecture is Kubernetes native: Custom Resource Definitions (CRDs) form its API layer, and etcd serves as its database. It is divided into two main components: the Application and the Operator. The Application is the core component and handles backup and recovery through CRDs and controllers. The Operator manages the lifecycle of the Application, including installation, upgrades, and high availability. The Application layer is kept decoupled from the Operator, so the Trilio operator for Kubernetes can easily be replaced with the Operator Lifecycle Manager (OLM) framework on OpenShift.

Trilio Operator

Trilio for Kubernetes comes with its own Helm-based operator, which is managed through a CRD called TrilioVaultManager. The operator takes care of the lifecycle of the application. The TrilioVaultManager resource contains configuration such as the scope of the application (Namespaced or Clustered), the application version, and the deployment namespace. Based on this configuration, the operator creates and updates the application instance. It also recovers the application automatically if one of its components goes down.

Trilio for Kubernetes is also available through the Operator Lifecycle Manager (OLM) framework. It can be deployed from the embedded OperatorHub in Red Hat OpenShift.

Trilio Application

TrilioVault consists of several Custom Resource Definitions (CRDs) and their associated controllers, which together form the core of the application. It also includes an admission webhook server, now integrated with the control plane, which handles the validation and mutation of CRD instances during creation, update, and deletion operations.

The controllers continuously reconcile based on events triggered by operations on these custom resources. These controllers, along with the admission webhook, are packaged together as part of the TrilioVault control plane.

To perform backup, restore, and other operations, the controllers dynamically launch multiple Kubernetes Job resources in parallel. These jobs handle the actual execution and are collectively referred to as the execution layer.

Control Plane

The Control Plane consists of various CRDs and their controllers. The main controllers are described below.

Target Controller

The Target CRD holds information about a Target, which is the data store where backups are kept. The Target controller reconciles on the Target CRD. Its reconciliation loop checks whether the target is accessible and validates access by performing a sample CRUD operation. If the validation succeeds, it marks the Target CR as Available.

Only a Target in the Available state can be used for backup or restore.
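The availability check can be pictured with a minimal sketch. It assumes the target is already mounted at a local path and probes it with a create, read, update, and delete round trip. The function names and the `Unavailable` status string are hypothetical and are not taken from the Trilio code base.

```python
import os
import uuid


def probe_target(mount_path: str) -> bool:
    """Return True if a create/read/update/delete round trip works under mount_path."""
    probe_file = os.path.join(mount_path, f".probe-{uuid.uuid4().hex}")
    try:
        # Create
        with open(probe_file, "w") as f:
            f.write("v1")
        # Read
        with open(probe_file) as f:
            if f.read() != "v1":
                return False
        # Update
        with open(probe_file, "w") as f:
            f.write("v2")
        with open(probe_file) as f:
            if f.read() != "v2":
                return False
        # Delete
        os.remove(probe_file)
        return not os.path.exists(probe_file)
    except OSError:
        # Any I/O failure (missing mount, no permission, ...) means "not usable".
        return False


def reconcile_target(mount_path: str) -> str:
    """Map the probe result to a status string ("Unavailable" is a hypothetical name)."""
    return "Available" if probe_target(mount_path) else "Unavailable"
```

A controller built along these lines would run `reconcile_target` on every reconciliation and write the result into the status of the Target resource.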

BackupPlan Controller

The BackupPlan CRD (including ClusterBackupPlan) defines the components to back up, their quiescing strategy, their automated backup schedule, their backup retention strategy, and all other information needed for a backup. The BackupPlan Controller is responsible for validating the BackupPlan and creating the CronJob resources required to run automated backups.

Backup Controller

The Backup CRD (including ClusterBackup) represents a backup operation. The Backup Controller reconciles on the Backup CRD and executes the backup by creating multiple Kubernetes jobs. It first creates a snapshotter job, which collects all the metadata, identifies the data components from that metadata, and uploads the metadata to the target. It then triggers a data snapshot through CSI for every data component identified in the snapshot step. As the CSI snapshots complete, the datamover uploads the data to the target as incremental QCOW2 images. Once the backup is complete, the controller squashes backups according to the retention policy defined in the BackupPlan.

Snapshot Controller

The Snapshot CRD (including ClusterSnapshot) represents a snapshot operation. The Snapshot Controller reconciles on the Snapshot CRD and executes the snapshot operation. It is very similar to the Backup Controller in the operations it performs through jobs, with one key exception: a snapshot operation takes on-cluster backups of the application's data components. Like the Backup Controller, it creates a snapshotter job, which collects all the metadata, identifies the data components from that metadata, and uploads the metadata to the target. It then triggers a data snapshot through CSI for every data component identified in the snapshot step. Unlike the Backup Controller, however, it does not upload any data from the CSI snapshots. The CSI snapshots exist only on the cluster and later serve as the backed-up data components for a faster restore. Once the snapshot is complete, the controller retains snapshots according to the retention policy defined in the BackupPlan, as the Backup Controller does for backups.
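The difference between the two controllers comes down to a single stage, which the following sketch makes explicit. The stage names are hypothetical labels for the steps described above, not identifiers from the product.

```python
from typing import List

# Hypothetical labels for the stages described in the text, in execution order.
BACKUP_STAGES = [
    "collect_and_upload_metadata",  # snapshotter job
    "csi_snapshot",                 # CSI snapshot of each data component
    "upload_data",                  # datamover uploads QCOW2 images to the target
    "apply_retention",              # retention policy from the BackupPlan
]


def plan_stages(operation: str) -> List[str]:
    """Return the ordered stages for a 'backup' or 'snapshot' operation."""
    if operation == "backup":
        return list(BACKUP_STAGES)
    if operation == "snapshot":
        # A snapshot keeps the CSI snapshots on the cluster, so nothing is uploaded.
        return [stage for stage in BACKUP_STAGES if stage != "upload_data"]
    raise ValueError(f"unknown operation: {operation!r}")
```

Calling `plan_stages("snapshot")` returns the backup stages without `upload_data`, which mirrors the statement that snapshot data stays on the cluster.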

Restore Controller

The Restore CRD (including ClusterRestore) represents a restore operation. The Restore Controller reconciles on the Restore CRD and executes the restore operation. Like the Backup Controller, it does so by creating multiple Kubernetes jobs. It first creates a validation job to check whether the restore can be performed. Once validation succeeds, it starts the data restore by creating datamover jobs in parallel. After the data has been restored successfully, a metaprocessor job restores the metadata.
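The ordering of the restore steps (validation first, data in parallel, metadata last) can be sketched as follows. Threads stand in for Kubernetes jobs here, and every function and parameter name is a hypothetical placeholder rather than part of the product.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_restore(
    validate: Callable[[], bool],
    restore_pv: Callable[[str], str],
    restore_metadata: Callable[[], str],
    pvs: List[str],
) -> List[str]:
    """Run validation, then per-PV data restores in parallel, then the metadata restore."""
    if not validate():
        # Nothing else runs when validation fails.
        return ["validation failed"]
    log = ["validation ok"]
    # One worker per PV restore; pool.map returns results in input order.
    with ThreadPoolExecutor() as pool:
        log.extend(pool.map(restore_pv, pvs))
    # Metadata is restored only after all data restores have finished.
    log.append(restore_metadata())
    return log
```

Leaving the `with` block waits for every data restore to finish, so the metadata step cannot start early.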

Webhook Server

The webhook server moderates API requests on custom resources in the Trilio API group. It contains both validation and mutation logic. The validation logic mainly checks whether the incoming input is correct. The mutation logic applies defaults and derives any additional data before the request reaches the Kubernetes API server.
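As a rough illustration of the two roles, the sketch below applies defaults to an incoming object and then validates the result, which matches the order in which Kubernetes calls mutating and validating admission webhooks. The field names `targetName` and `retentionCount` and the default value are hypothetical and do not describe the real Trilio schema.

```python
import copy
from typing import Any, Dict, List, Tuple

# Hypothetical defaults applied by the mutation step.
DEFAULTS: Dict[str, Any] = {"retentionCount": 7}


def mutate(obj: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of obj with missing spec fields filled in from DEFAULTS."""
    mutated = copy.deepcopy(obj)
    spec = mutated.setdefault("spec", {})
    for key, value in DEFAULTS.items():
        spec.setdefault(key, value)
    return mutated


def validate(obj: Dict[str, Any]) -> List[str]:
    """Return a list of validation errors; an empty list means the object is accepted."""
    errors = []
    spec = obj.get("spec", {})
    if not spec.get("targetName"):
        errors.append("spec.targetName is required")
    count = spec.get("retentionCount")
    if not isinstance(count, int) or count < 1:
        errors.append("spec.retentionCount must be a positive integer")
    return errors


def admit(obj: Dict[str, Any]) -> Tuple[bool, Dict[str, Any], List[str]]:
    """Mutate first, then validate the mutated object."""
    mutated = mutate(obj)
    errors = validate(mutated)
    return (not errors, mutated, errors)
```

Because validation runs on the mutated object, a request that omits `retentionCount` is accepted with the default, while a request without `targetName` is rejected.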

Ingress-Controller, Web, Dex, and Backend Deployments

A management console is supported and shipped with the product. Several pods are deployed for it, namely the ingress-controller, web, and backend pods. Together with their associated Service and Ingress objects, these pods provide the management console for Trilio for Kubernetes.

Trilio leverages Dex to support OIDC and LDAP authentication protocols. A pod running Dex is provided as part of the control plane.

Data Plane

The Data Plane includes datamover Pods, which are primarily responsible for transferring data between persistent volumes and the backup media. Trilio works with Persistent Volumes (PVs) through the CSI.

Trilio does not support in-tree storage drivers or flex volumes. Support starts with the alpha version of CSI snapshots, so Kubernetes 1.12 or later is required. If you are using Kubernetes 1.16 or earlier, you may need to enable the alpha feature gate for CSI snapshot functionality.

The Control Plane identifies the persistent volumes of the application, creates a snapshot of each PV, creates a new PV from each snapshot, and spawns a datamover Pod for each PV it creates. If the application has two PVs, the Control Plane spawns two datamover Pods, one for each PV to back up. The datamover specification also includes a reference to the backup target object to which the backup data is copied.
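The one-datamover-per-PV fan-out can be sketched as below. The `DatamoverSpec` class, its fields, and the naming scheme for snapshots and restored PVs are hypothetical and only illustrate the shape of the plan.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DatamoverSpec:
    """Hypothetical description of one datamover Pod."""
    source_pv: str      # PV that belongs to the application
    snapshot: str       # snapshot taken from source_pv
    snapshot_pv: str    # new PV created from the snapshot; the datamover reads this
    target: str         # backup target the data is copied to


def plan_datamovers(app_pvs: List[str], target: str) -> List[DatamoverSpec]:
    """Create one datamover spec per application PV."""
    specs = []
    for pv in app_pvs:
        snapshot = f"{pv}-snap"  # naming scheme is an assumption
        specs.append(DatamoverSpec(pv, snapshot, f"{snapshot}-pv", target))
    return specs
```

For an application with two PVs, `plan_datamovers(["data", "logs"], "nfs-target")` yields two specs that both reference the same target.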

Trilio backup images are QCOW2 images. Every time an application is backed up, Trilio creates a QCOW2 image for each PV in the application. As part of the backup process, the datamover Pod uses a Linux-based tool called qemu-img to convert the data on a PV to a QCOW2 image. During the restore process, a datamover converts a QCOW2 image back to a PV.
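For orientation, the sketch below builds `qemu-img convert` command lines for both directions. It assumes the PV data is reachable as a raw block device or raw image file. The source text does not state the exact invocation the datamover uses, so the flags shown are only the standard `qemu-img` options for format conversion, and the paths are placeholders.

```python
import shlex
from typing import List


def backup_command(pv_device: str, image_path: str) -> List[str]:
    """Build a command that converts raw PV data into a QCOW2 image."""
    return ["qemu-img", "convert", "-f", "raw", "-O", "qcow2", pv_device, image_path]


def restore_command(image_path: str, pv_device: str) -> List[str]:
    """Build a command that writes a QCOW2 image back out as raw data."""
    return ["qemu-img", "convert", "-f", "qcow2", "-O", "raw", image_path, pv_device]


if __name__ == "__main__":
    # Placeholder paths; the commands are printed, not executed.
    print(shlex.join(backup_command("/dev/pv-block", "/mnt/target/pv.qcow2")))
    print(shlex.join(restore_command("/mnt/target/pv.qcow2", "/dev/pv-block")))
```

Returning the arguments as a list keeps them safe to pass to `subprocess.run` without shell quoting concerns.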

Datamover Pods are created only to back up or restore a particular PV. Once the data transfer is done, they cease to exist. The number of datamover Pods at any time depends on the number of backup jobs and the number of PVs in their applications.

The Trilio datamover supports transferring data to and from either NFS or S3-compatible storage. The backup target is part of the datamover spec. If the backup target is NFS, the share is mounted in the datamover and the data is transferred to the NFS share. S3-compatible storage is handled differently because S3 does not support POSIX-compatible file system API calls. The S3 bucket is mounted through a FUSE plugin as a local file system mount point, and backup and restore operations are performed against that mount point. You can read more about S3 FUSE plugin implementations at S3 as Backup Target.