Getting Started with Trilio for AWS Elastic Kubernetes Service (EKS)

Learn how to install, license and test Trilio for Kubernetes (T4K) in the AWS Elastic Kubernetes Service (EKS) environment.

What is Trilio for Kubernetes?

Trilio for Kubernetes is a cloud-native backup and restore application. Being a cloud-native application for Kubernetes, all operations are managed with CRDs (Custom Resource Definitions).

Trilio utilizes Control Plane and Data Plane controllers to carry out the backup and restore operations defined by the associated CRDs. When a CRD is created or modified, the controller reconciles the definitions to the cluster.

Trilio gives you the power and flexibility to back up your entire cluster or select one or more specific namespaces, a label, a Helm chart, or an Operator as the scope for your backup operations.

In this tutorial, we'll show you how to install and test operation of Trilio for Kubernetes on your EKS deployment.

Prerequisites

Before installing Trilio for Kubernetes, please review the compatibility matrix to ensure Trilio can function smoothly in your Kubernetes environment.

Trilio for Kubernetes requires a compatible Container Storage Interface (CSI) driver that provides the Snapshot feature.

Check the Kubernetes CSI Developer Documentation to select a driver appropriate for your backend storage solution. See the selected CSI driver's documentation for details on the installation of the driver in your cluster.

Trilio will assume that the selected storage driver is a supported CSI driver when the volumesnapshotclass and storageclass are utilized.

Trilio for Kubernetes requires the following Custom Resource Definitions (CRDs) to be installed on your cluster: VolumeSnapshot, VolumeSnapshotContent, and VolumeSnapshotClass.

Installing the Required VolumeSnapshot CRDs

Before attempting to install the VolumeSnapshot CRDs, it is important to confirm that the CRDs are not already present on the system.

To do this, run the following command:

If CRDs are already present, the output should be similar to the output displayed below. The second column displays the version of the CRD installed (v1 in this case). Ensure that it is the correct version required by the CSI driver being used.
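The check described above can be sketched as follows. This is a minimal example, not the exact command from the original guide: it assumes `kubectl` is installed and its current context points at the EKS cluster, and the helper name `check_snapshot_crds` is purely illustrative.

```shell
# Illustrative helper: list VolumeSnapshot-related API resources in the cluster.
# Assumes kubectl is installed and its current context points at the EKS cluster.
check_snapshot_crds() {
  # api-resources prints the API version (for example snapshot.storage.k8s.io/v1)
  # next to each resource name.
  if kubectl api-resources | grep -i volumesnapshot; then
    return 0
  fi
  echo "No VolumeSnapshot CRDs found" >&2
  return 1
}
```

Running `check_snapshot_crds` prints the matching resources; a non-zero exit status suggests the CRDs still need to be installed.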

Installing CRDs

Be sure to install only the v1 version of the VolumeSnapshot CRDs.

Read the external-snapshotter GitHub project documentation. This is compatible with v1.22+.

Run the following commands to install the CRDs directly; check the repo for the latest version:
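As a sketch of such an installation, the helper below applies the three CRD manifests from the kubernetes-csi/external-snapshotter repository. The branch name `release-6.0` is a placeholder assumption rather than a recommendation, and the helper and variable names are illustrative; confirm the branch and file paths against the repository before use.

```shell
# Branch of kubernetes-csi/external-snapshotter to install from.
# "release-6.0" is a placeholder assumption; check the repo for the current release.
SNAPSHOTTER_BRANCH="${SNAPSHOTTER_BRANCH:-release-6.0}"
CRD_BASE_URL="https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/${SNAPSHOTTER_BRANCH}/client/config/crd"

# Illustrative helper: apply the three VolumeSnapshot CRD manifests.
install_snapshot_crds() {
  for crd in volumesnapshotclasses volumesnapshotcontents volumesnapshots; do
    kubectl apply -f "${CRD_BASE_URL}/snapshot.storage.k8s.io_${crd}.yaml"
  done
}
```

Calling `install_snapshot_crds` applies the manifests one at a time; set `SNAPSHOTTER_BRANCH` beforehand to target a different release branch.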

For non-air-gapped environments, the following URLs must be accessed from your Kubernetes cluster.

Access to the S3 endpoint if the backup target happens to be S3

Access to application artifacts registry for image backup/restore

If the Kubernetes cluster's control plane and worker nodes are separated by a firewall, then the firewall must allow traffic on the following port(s)

9443

Verify Prerequisites with the Trilio Preflight Check

Make sure your cluster is ready to install Trilio for Kubernetes by installing the Preflight Check Plugin and running the Trilio Preflight Check.

Trilio provides a preflight check tool that allows customers to validate their environment for Trilio installation.

The tool generates a report detailing all the requirements and whether they are met or not.

If you encounter any failures, please send the Preflight Check output to your Trilio Professional Services and Solutions Architect so we may assist you in satisfying any missing requirements before proceeding with the installation.

Installation Methods

There are two methods that can be used to install T4K on the AWS EKS cluster:

Install from the AWS Marketplace -

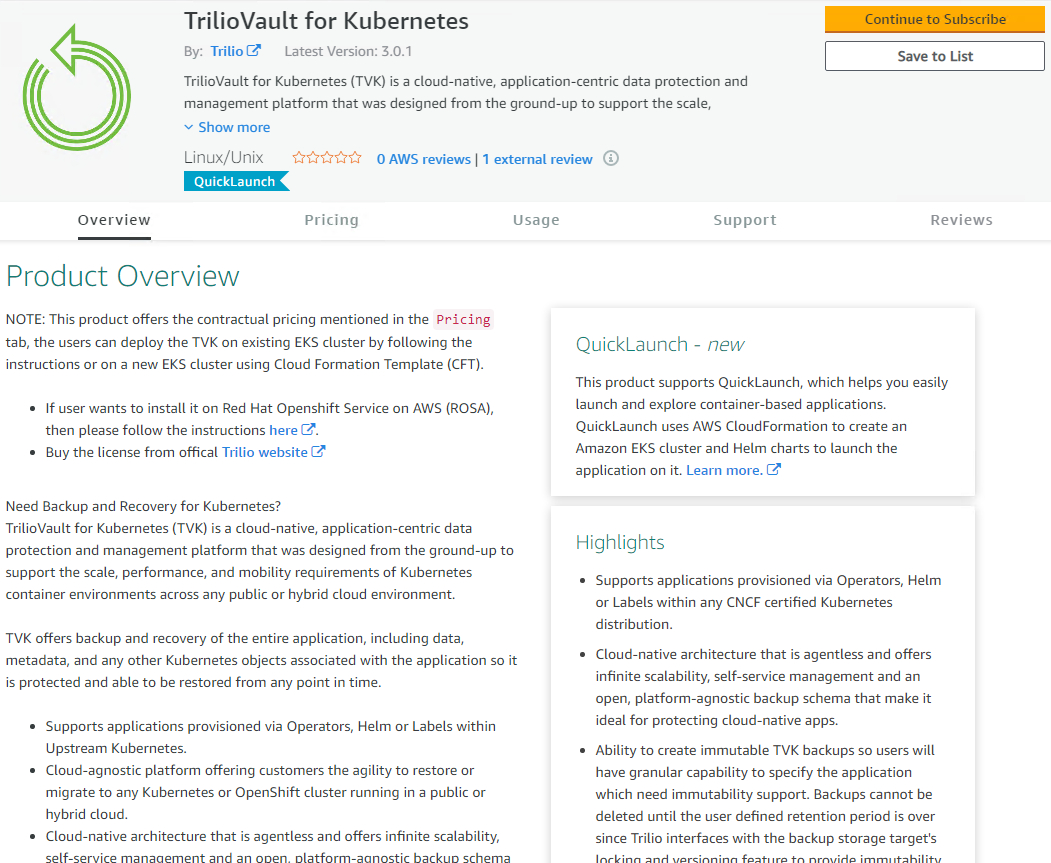

The Trilio for Kubernetes application is listed in the AWS Marketplace, where users can opt for a Long Term Subscription to the product.

Trilio for Kubernetes (Long-Term Contractual Pricing)

Install manually from the CLI -

Users can follow the exact installation instructions provided in Getting Started with Trilio for Upstream Kubernetes (K8S) environments to install T4K into EKS clusters.

Both types of installation set up the following:

Trilio for Kubernetes Operator is installed in the tvk namespace

Trilio for Kubernetes Manager is installed in the tvk namespace

Trilio ingress is configured to access the T4K Management UI. Refer to Configuring the UI.

Follow the step-by-step instructions below to install T4K from the AWS marketplace:

1. Trilio for Kubernetes (Long-Term Contractual Pricing)

Search for Trilio on the AWS Marketplace and select the Trilio for Kubernetes application offer.

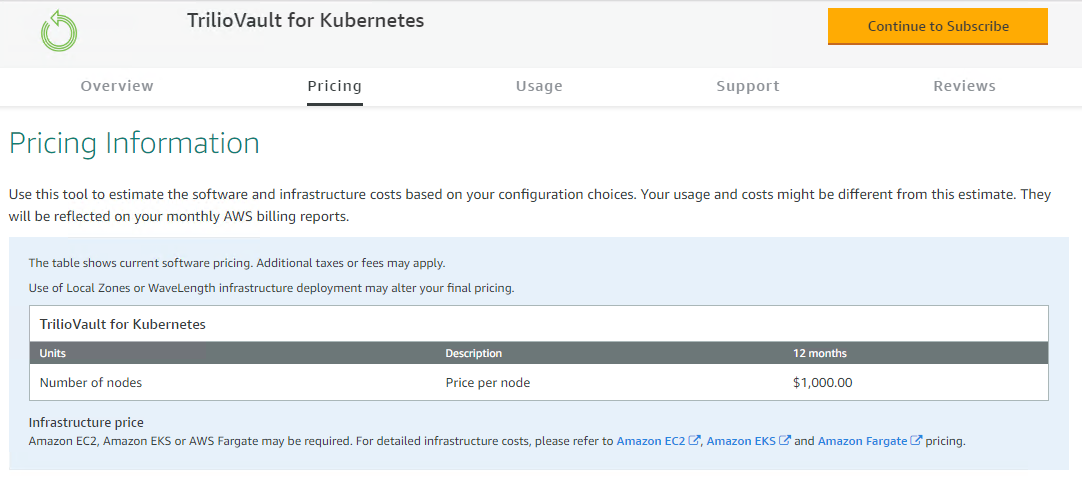

This offer is built for the long-term contractual license. It is valid for one year at a price of $1000 per node (by default, one node is counted as 4 vCPUs).

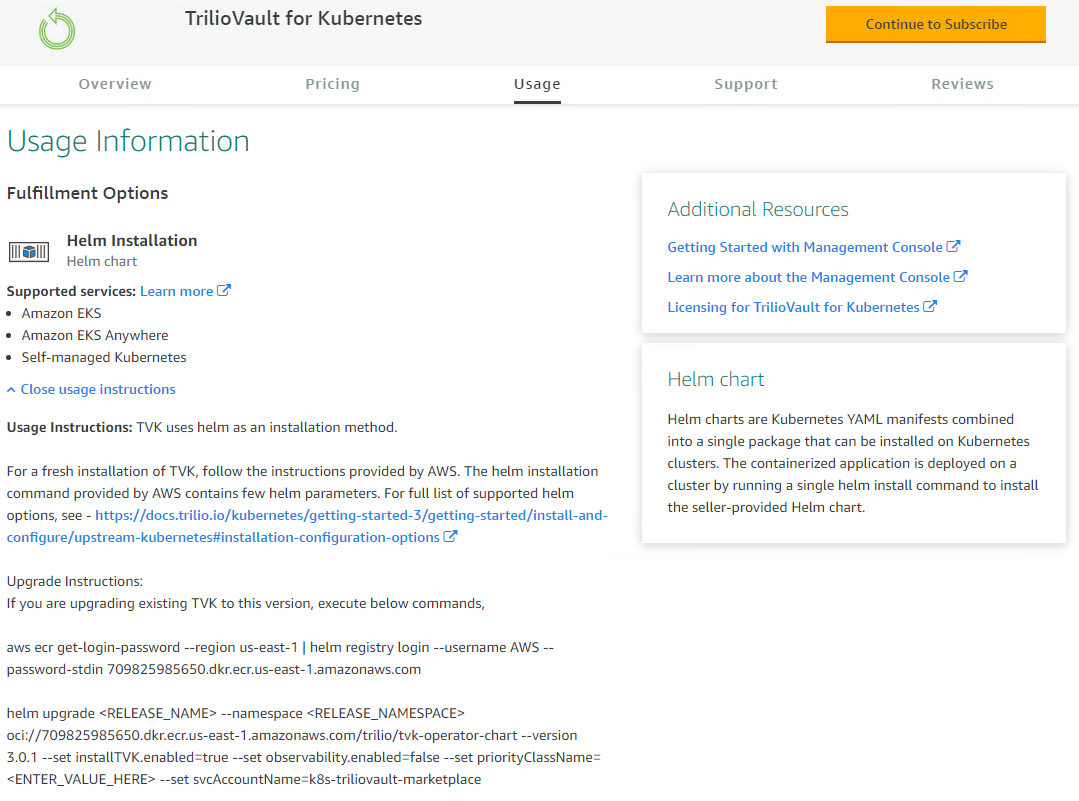

A Helm chart is used to perform the product installation. The user can install the product on an existing EKS cluster or use a CloudFormation Template (CFT) to automatically create a new EKS cluster with T4K installed on it.

After T4K is installed, the user can apply the license they have acquired from the Trilio Professional Services and Solutions Architecture team.

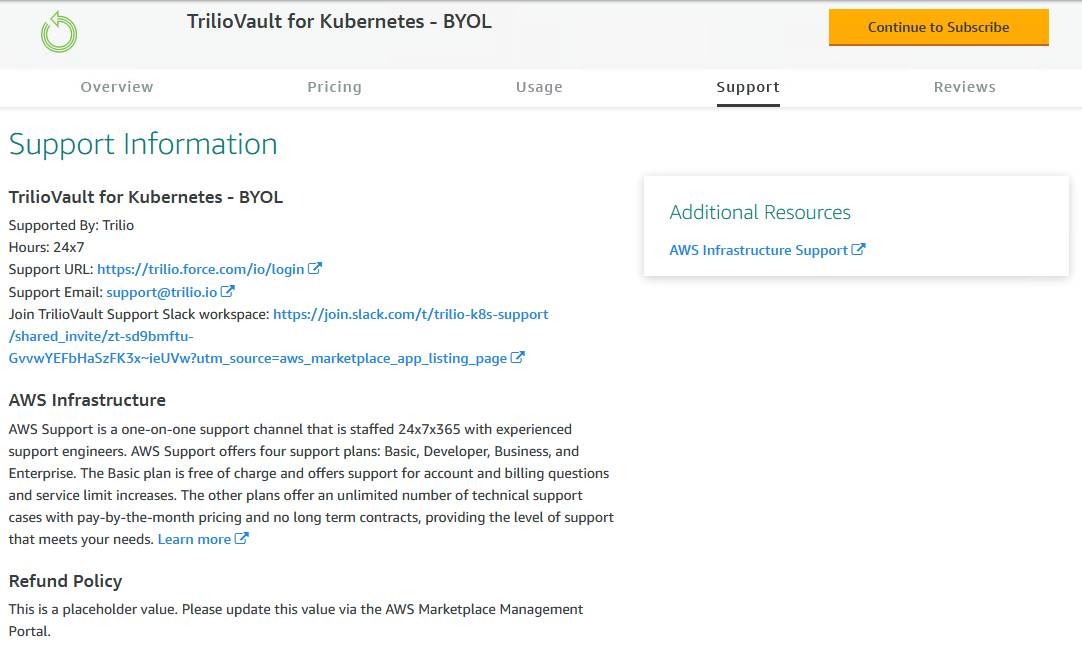

If users face any issues, they can contact the Support team using the information in the Support tab.

Click on the Continue to Subscribe button from the product listing page.

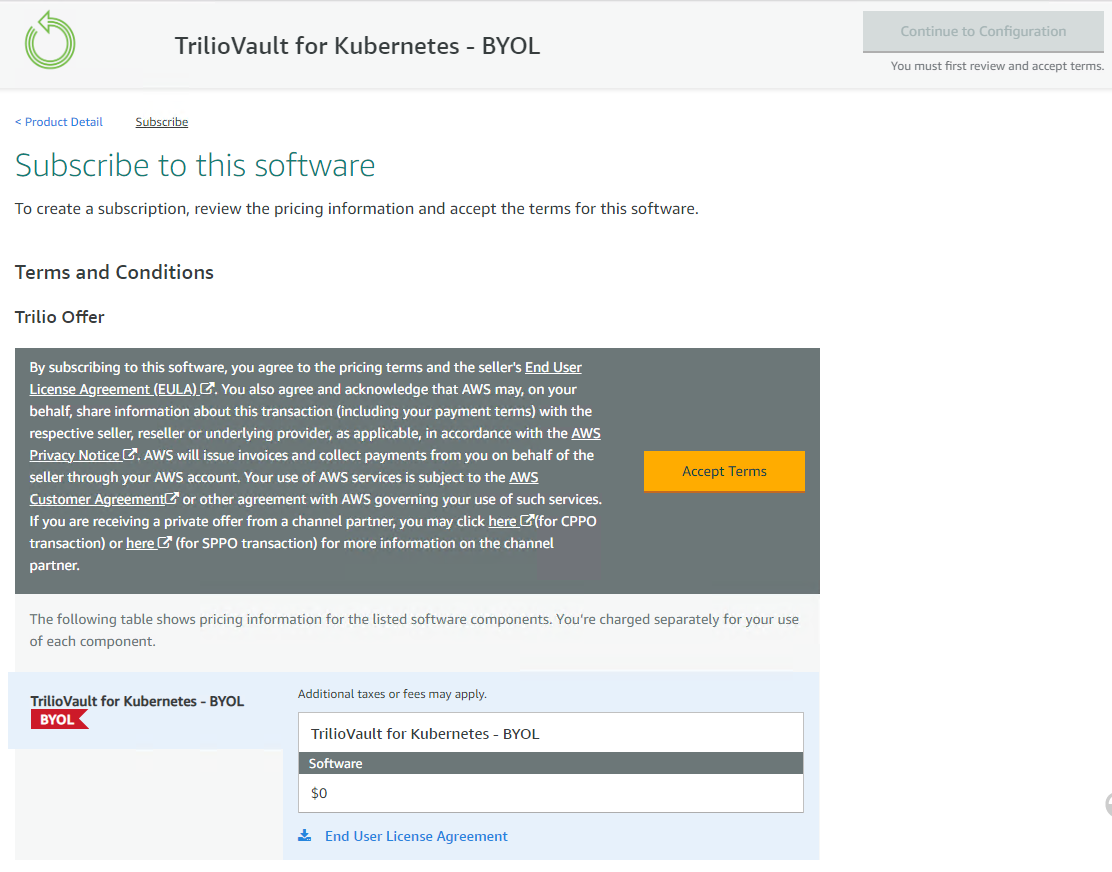

Verify that the BYOL offer price is mentioned as $0 and click on the Accept Terms button to proceed.

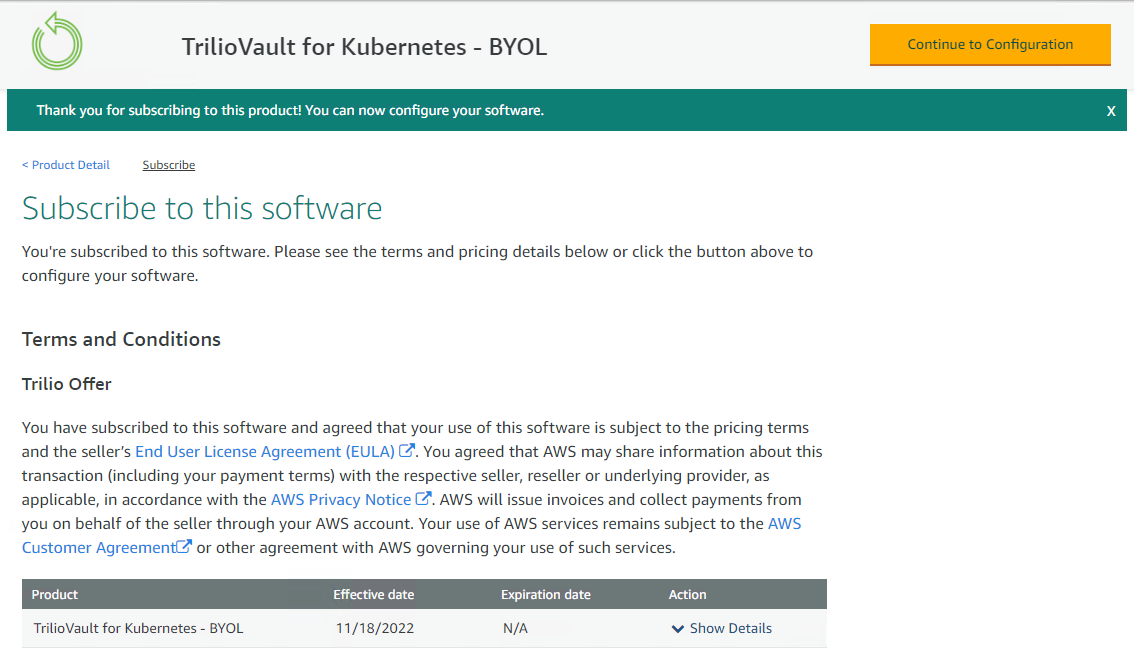

Once the terms are accepted, the Effective Date will be updated in the offer. Now, click on the Continue to Configuration button to proceed with the installation commands.

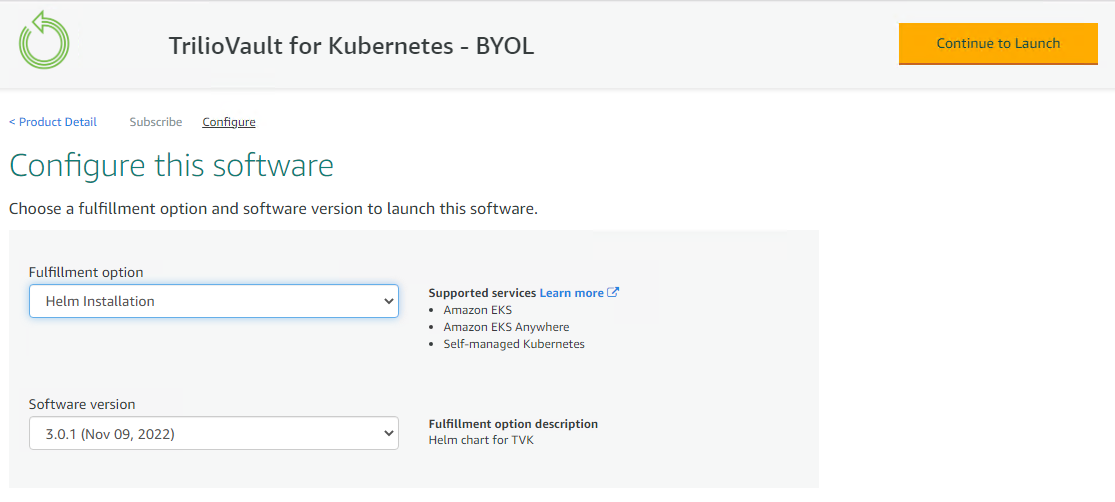

Choose Helm Installation as the Fulfilment option and select the desired Software version from the listed versions. Click on the Continue to Launch button.

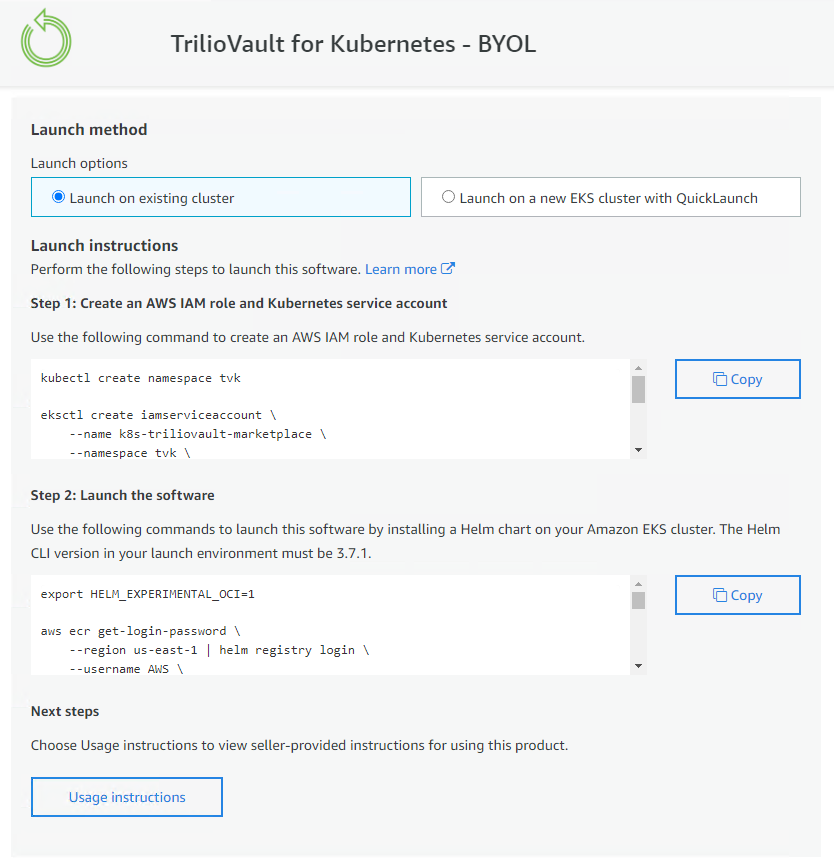

In the Launch method you can select from two options:

Launch on existing cluster -

Install T4K on your existing EKS cluster

Log in to the existing EKS cluster through the CLI and connect to AWS through awscli.

Follow the commands to create the AWS IAM role and Kubernetes Service Account on AWS.

Follow the command under the Launch the Software section to pull the Helm chart and install the product.

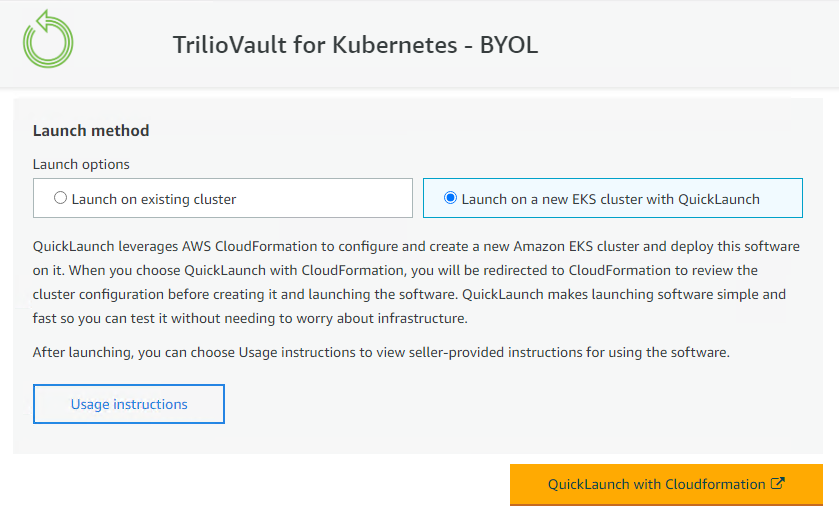

Launch a new EKS cluster with QuickLaunch -

Click on QuickLaunch with CloudFormation to trigger the template deployment.

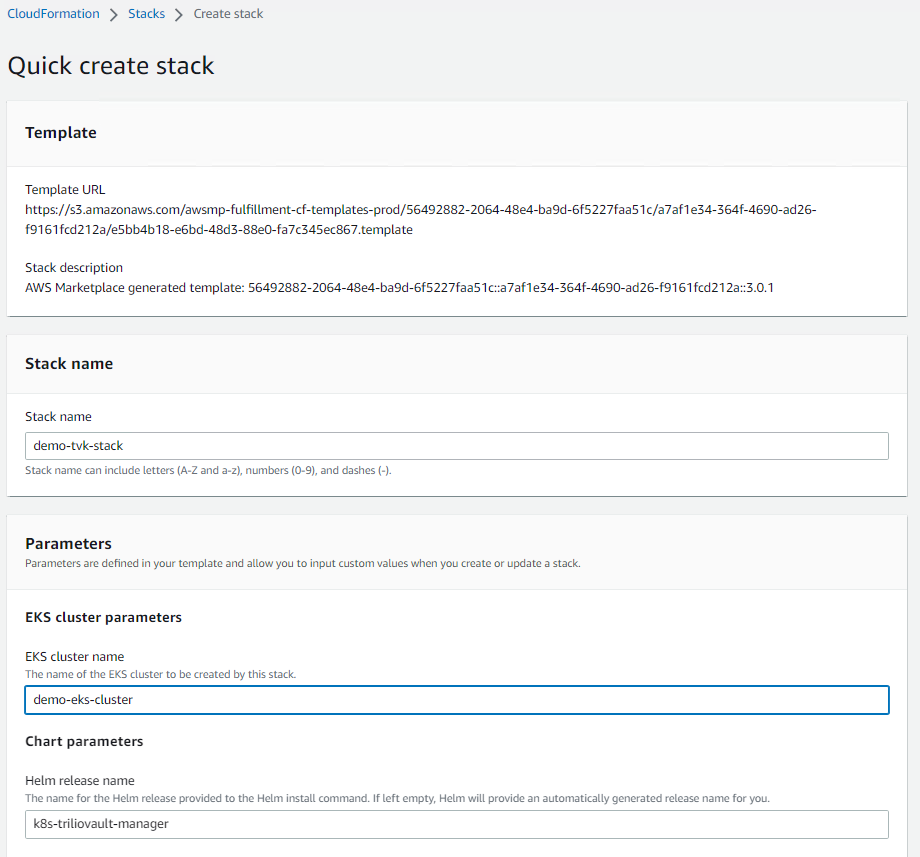

Provide the Stack name and EKS cluster name to create the stack.

Click on the

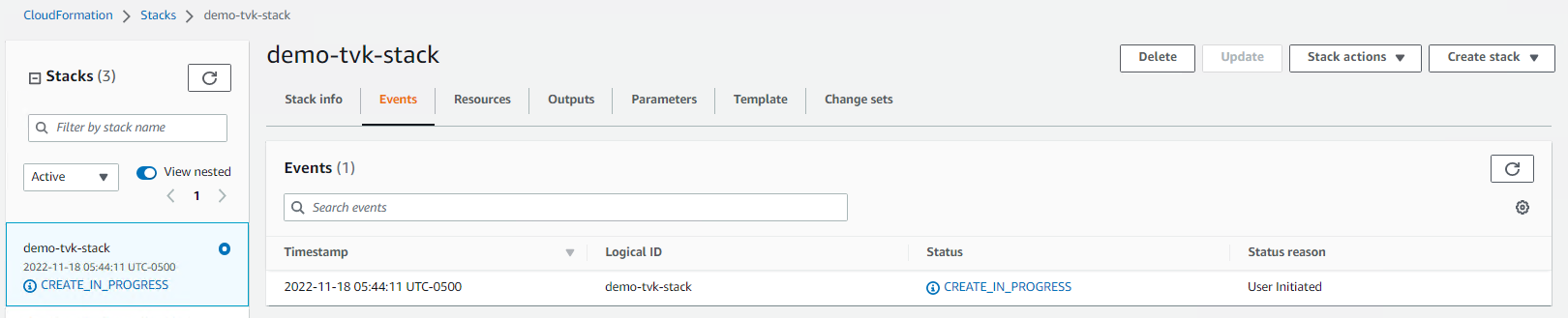

Create stackbutton at the button to start the stack deployment.

Authentication

The T4K user interface facilitates authentication through kubeconfig files, which house elements such as tokens, certificates, and auth-provider information. However, in some Kubernetes cluster distributions, the kubeconfig might include cloud-specific exec actions or auth-provider configurations to retrieve the authentication token via the credentials file. By default, this is not supported.

When using kubeconfig on the local system, any cloud-specific action or config in the user section of the kubeconfig will seek the credentials file in a specific location. This allows the kubectl/client-go library to generate an authentication token for use in authentication. However, when the T4K Backend is deployed in the Cluster Pod, the credentials file necessary for token generation is not accessible within the Pod.

To rectify this, T4K features cloud distribution-specific support to manage and generate tokens from these credential files.

Using credentials for login

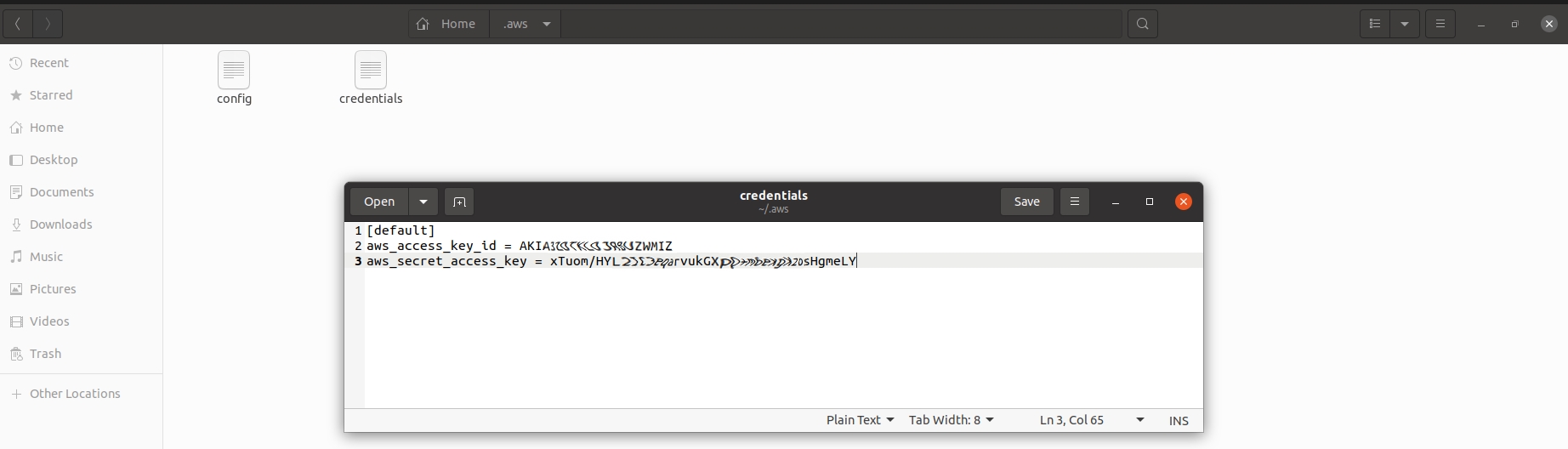

In an EKS cluster, a local binary known as aws (aws-cli) is used to pull the credentials from a file named credentials.

This file is located under the path $HOME/.aws and is used to generate an authentication token.

When a user attempts to log into the T4K user interface deployed in an EKS cluster, they are expected to supply the credentials file from the location $HOME/.aws for successful authentication.

Example of Default kubeconfig
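For illustration only: on EKS the kubeconfig is commonly generated with `aws eks update-kubeconfig`, and its user entry obtains a token by calling `aws eks get-token` through an exec action. The helper name, region, account ID and cluster name below are placeholders, and the exact stanza can vary with the AWS CLI version.

```shell
# Illustrative helper: (re)generate the kubeconfig entry for an EKS cluster.
# Usage: write_eks_kubeconfig <region> <cluster-name>
write_eks_kubeconfig() {
  aws eks update-kubeconfig --region "$1" --name "$2"
}

# The generated user entry typically looks like this (values are placeholders):
#
# users:
# - name: arn:aws:eks:us-east-1:111122223333:cluster/my-cluster
#   user:
#     exec:
#       apiVersion: client.authentication.k8s.io/v1beta1
#       command: aws
#       args:
#       - --region
#       - us-east-1
#       - eks
#       - get-token
#       - --cluster-name
#       - my-cluster
```

The `exec` stanza is the cloud-specific action referred to above: it relies on the local `aws` binary and the credentials file, neither of which exists inside the T4K backend Pod.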

Example of Credentials pulled from credentials
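As a rough sketch, the credentials file follows the usual AWS CLI profile layout shown in the comments below (placeholder values only). The helper `list_aws_profiles` is a hypothetical convenience, not part of T4K or the AWS CLI, for confirming which profiles a file contains before supplying it at login.

```shell
# A credentials file generally has this shape (placeholder values):
#
# [default]
# aws_access_key_id = <access key id>
# aws_secret_access_key = <secret access key>

# Illustrative helper: print the profile names found in a credentials file.
# Usage: list_aws_profiles [path]   (defaults to $HOME/.aws/credentials)
list_aws_profiles() {
  creds_file="${1:-$HOME/.aws/credentials}"
  if [ ! -r "$creds_file" ]; then
    echo "credentials file not readable: $creds_file" >&2
    return 1
  fi
  # Profile headers are lines of the form [name].
  sed -n 's/^\[\(.*\)\]$/\1/p' "$creds_file"
}
```

Calling `list_aws_profiles` with no argument inspects the default location under $HOME/.aws.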

Access Entry / aws-auth ConfigMap configurations to support impersonation

T4K uses user impersonation in admission webhooks to validate that users have access to referenced resources (namespaces, secrets, other T4K objects) when creating or modifying T4K resources. This prevents privilege escalation by ensuring operations respect user permissions rather than using T4K's elevated service account privileges.

When using EKS clusters, T4K webhooks may fail during user impersonation if users are not properly configured with Kubernetes groups. This applies to both Access Entries (newer method) and aws-auth ConfigMap (legacy method). This section explains how to properly configure EKS authentication to ensure T4K operates correctly.

For impersonation to work in EKS, users must have either:

Kubernetes Groups - Through Access Entries or aws-auth ConfigMap

Direct ClusterRoleBinding - Binding permissions directly to user ARN

EKS Authentication Methods

Method 1: Access Entries (Recommended)

EKS Access Entries can grant permissions via:

Access Policies: This is AWS-managed authorization. It works for direct API calls but does not work for Kubernetes impersonation.

Kubernetes Groups: This is Kubernetes-native authorization. Because it is Kubernetes-native, it allows T4K to impersonate the user in order to perform certain actions on their behalf.

Configure Access Entry with Kubernetes Groups:
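One way to do this is with the AWS CLI's `aws eks create-access-entry` command, sketched below. The helper name and the example arguments are placeholders, and the sketch assumes the cluster's authentication mode already permits access entries.

```shell
# Illustrative helper: create an EKS access entry that maps an IAM principal
# to a custom Kubernetes group.
# Usage: create_group_access_entry <cluster-name> <principal-arn> <k8s-group>
create_group_access_entry() {
  aws eks create-access-entry \
    --cluster-name "$1" \
    --principal-arn "$2" \
    --kubernetes-groups "$3" \
    --type STANDARD
}
```

For example, `create_group_access_entry my-cluster arn:aws:iam::111122223333:role/tvk-admin tvk-admins` would associate that role with a custom `tvk-admins` group, which can then be bound to Kubernetes RBAC roles.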

Limitations:

EKS Access Entries do not allow groups with the system:* prefix, so you cannot use system:masters or system:authenticated. Instead, create custom group names such as tvk-users, tvk-admins, or cluster-admins and associate them with the access entry.

Method 2: aws-auth ConfigMap (Legacy)

If using the aws-auth ConfigMap approach, users must be configured with groups:

This configuration will not work for T4K impersonation if the user doesn't have direct clusterRoleBinding associated:
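The two cases above might look like the `mapUsers` entries printed by this sketch. The ARNs, usernames and the `tvk-admins` group are placeholders, and the helper exists only to display the fragments side by side.

```shell
# Illustrative only: print example mapUsers entries for the aws-auth ConfigMap.
# ARNs, usernames and the tvk-admins group are placeholders.
print_aws_auth_examples() {
  cat <<'EOF'
# Works with T4K impersonation: the user is mapped to a Kubernetes group.
mapUsers: |
  - userarn: arn:aws:iam::111122223333:user/alice
    username: alice
    groups:
      - tvk-admins
---
# Not sufficient by itself: no group mapping, so a direct
# ClusterRoleBinding for this user would also be required.
mapUsers: |
  - userarn: arn:aws:iam::111122223333:user/bob
    username: bob
EOF
}
```

Entries like these are normally merged into the existing ConfigMap, for example with `kubectl edit -n kube-system configmap/aws-auth`, rather than applied as a new object.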

Required Kubernetes RBAC Configuration

Regardless of which authentication method you use, create the necessary RBAC:
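A minimal sketch of such a binding uses `kubectl create clusterrolebinding` with the `--group` flag. The helper name is illustrative, and the ClusterRole you bind is an assumption to be chosen according to the permissions the group should actually have.

```shell
# Illustrative helper: bind a Kubernetes group to an existing ClusterRole.
# Usage: bind_group_to_role <binding-name> <clusterrole> <group>
bind_group_to_role() {
  kubectl create clusterrolebinding "$1" --clusterrole="$2" --group="$3"
}
```

For example, `bind_group_to_role tvk-admins-binding cluster-admin tvk-admins` would grant the built-in `cluster-admin` role to the `tvk-admins` group; a narrower role is usually preferable outside of test clusters.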

Alternative: Direct User Binding (Not Recommended)

If you cannot use groups, you can create a direct ClusterRoleBinding to the user ARN. In this case, no group configuration is needed in the Access Entry or aws-auth ConfigMap. This approach is discouraged because a separate binding must be managed for every user, which does not scale as the number of users grows.
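For completeness, a direct binding could be sketched with the `--user` flag of `kubectl create clusterrolebinding`. The helper name and the example ARN are placeholders, and the user string must match the username Kubernetes sees for that principal.

```shell
# Illustrative helper: bind a single user (identified by ARN) to a ClusterRole.
# Usage: bind_user_to_role <binding-name> <clusterrole> <user-arn>
bind_user_to_role() {
  kubectl create clusterrolebinding "$1" --clusterrole="$2" --user="$3"
}
```

Each additional user needs a separate call, such as `bind_user_to_role alice-binding cluster-admin arn:aws:iam::111122223333:user/alice`, which illustrates why the group-based approach is easier to maintain.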

Licensing Trilio for Kubernetes

To generate and apply the Trilio license, perform the following steps:

Although a cluster license enables Trilio features across all namespaces in a cluster, the license only needs to be applied in the namespace where Trilio is installed, for example the trilio-system namespace.

1. Obtain a license by getting in touch with us here. The license file will contain the license key.

2. Apply the license file to a Trilio instance using the command line or UI:

Execute the following command:
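A sketch of this step, based on the `kubectl apply -f <licensefile> -n <install-namespace>` form used elsewhere in this guide, is shown below. The helper name is illustrative, and the default namespace `trilio-system` is an assumption that should match where Trilio is installed.

```shell
# Illustrative helper: apply a license file and then list the license object.
# Usage: apply_trilio_license <license-file> [install-namespace]
apply_trilio_license() {
  license_ns="${2:-trilio-system}"
  kubectl apply -f "$1" -n "$license_ns" && kubectl get license -n "$license_ns"
}
```

The second command only runs if the apply succeeds, so its output can be used to confirm that the license was accepted.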

2. If the previous step is successful, check that the output generated is similar to the following:

Additional license details can be obtained using the following:

kubectl get license -o json -n trilio-system

Prerequisites:

Authenticate access to the Management Console (UI). Refer to .

Configure access to the Management Console (UI). Refer to Configuring the UI.

If you have already executed the above prerequisites, then refer to the guide for applying a license in the UI: Actions: License Update

Upgrading a license

A license upgrade is required when moving from one license type to another.

Trilio maintains only one instance of a license for every installation of Trilio for Kubernetes.

To upgrade a license, run kubectl apply -f <licensefile> -n <install-namespace> against a new license file to activate it. The previous license will be replaced automatically.

Create a Target

The Target CR (Custom Resource) is defined from the Trilio Management Console or from your own self-prepared YAML.

The Target object references the NFS or S3 storage share you provide as a target for your backups/snapshots. Trilio will create a validation pod in the namespace where Trilio is installed and attempt to validate the NFS or S3 settings you have defined in the Target CR.

Trilio makes it easy to automatically create your Target CR from the Management Console.

Learn how to Create a Target from the Management Console

Take control of Trilio and define your own self-prepared YAML and apply it to the cluster using the oc/kubectl tool.

Example S3 Target
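The manifest below is a sketch of what an S3 Target might look like, not the guide's original example. The field names are recalled from Trilio's published samples and should be treated as assumptions to verify against the Target CRD for your T4K version; the object name, bucket, region and secret are placeholders.

```shell
# Illustrative only: print a sample S3 Target manifest.
# Field names are assumptions to verify against your T4K version;
# the name, bucket, region and secret are placeholders.
print_s3_target_example() {
  cat <<'EOF'
apiVersion: triliovault.trilio.io/v1
kind: Target
metadata:
  name: demo-s3-target
spec:
  type: ObjectStore
  vendor: AWS
  objectStoreCredentials:
    region: us-east-1
    bucketName: my-backup-bucket
    credentialSecret:
      name: sample-secret
      namespace: trilio-system
  thresholdCapacity: 5Gi
EOF
}
```

Once the values are adjusted, the manifest could be applied with `print_s3_target_example | kubectl apply -n trilio-system -f -`, after which the validation pod checks the bucket settings.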

See more Example Target YAML

Testing Backup, Snapshot and Restore Operation

Trilio is a cloud-native application for Kubernetes, therefore all operations are managed with CRDs (Custom Resource Definitions). We will discuss the purpose of each Trilio CR and provide examples of how to create these objects automatically in the Trilio Management Console or from the oc/kubectl tool.

About Backup Plans

The Backup Plan CR is defined from the Trilio Management Console or from your own self-prepared YAML.

The Backup Plan CR must reference the following:

Your Application Data (label/helm/operator)

BackupConfig: Target CR, Scheduling Policy CR, Retention Policy CR

SnapshotConfig: Target CR, Scheduling Policy CR, Retention Policy CR

A Target CR is defined from the Trilio Management Console or from your own self-prepared YAML. Trilio will test the backup target to ensure it is reachable and writable. Look at the Trilio validation pod logs to troubleshoot any backup target creation issues.

Retention and Schedule Policy CRs are defined from the Trilio Management Console or from your own self-prepared YAML.

Scheduling Policies allow users to automate the backup/snapshot of Kubernetes applications on a periodic basis. With this feature, users can create a scheduling policy that includes multiple cron strings to specify the frequency of backups.

Retention Policies make it easy for users to define the number of backups/snapshots they want to retain and the rate at which old backups/snapshots should be deleted. With the retention policy CR, users can use a simple YAML specification to define the number of backups/snapshots to retain in terms of days, weeks, months, years, or the latest backup/snapshots. This provides a flexible and customizable way to manage your backup/snapshots retention policy and ensure you meet your compliance requirements.

The Backup and Snapshot CRs are defined from the Trilio Management Console or from your own self-prepared YAML.

The backup/snapshot object references the actual backup Trilio creates on the Target. The backup is taken as either a Full or Incremental backup as defined by the user in the Backup CR. The snapshot is taken as a Full snapshot only.

Creating a Backup Plan

Trilio makes it easy to automatically create your backup plans and all required target and policy CRs from the Management Console.

Take control of Trilio, define your self-prepared YAML, and apply it to the cluster using the oc/kubectl tool.

Example Namespace Scope BackupPlan:
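The following is a deliberately minimal sketch rather than the guide's original example. It assumes a BackupPlan with `backupConfig` and `snapshotConfig` blocks that each reference a Target by name and namespace; these field names, the object names and the namespace are assumptions to check against the BackupPlan CRD for your T4K version. Retention and schedule policies are omitted.

```shell
# Illustrative only: print a minimal namespace-scoped BackupPlan manifest.
# Field names are assumptions to verify against your T4K version;
# object names and namespaces are placeholders.
print_backupplan_example() {
  cat <<'EOF'
apiVersion: triliovault.trilio.io/v1
kind: BackupPlan
metadata:
  name: sample-backupplan
  namespace: default
spec:
  backupConfig:
    target:
      name: demo-s3-target
      namespace: default
  snapshotConfig:
    target:
      name: demo-s3-target
      namespace: default
EOF
}
```

After adjusting the names, the manifest could be applied with `print_backupplan_example | kubectl apply -f -`.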

The Target in backupConfig and snapshotConfig must be the same. Users can specify different retention and schedule policies under backupConfig and snapshotConfig.

See more Examples of Backup Plan YAML

Creating a Backup

Learn more about Creating Backups from the Management Console

Creating a Snapshot

Learn more about Creating Snapshots from the Management Console

About Restore

A Restore CR (Custom Resource) is defined from the Trilio Management Console or from your own self-prepared YAML. The Restore CR references a backup object which has been created previously from a Backup CR.

In a migration scenario, the location of the backup/snapshot should be specified within the desired target, as there will be no Backup/Snapshot CR defining the location. If you are migrating a snapshot, make sure that the actual Persistent Volume snapshots are accessible from the other cluster.

Trilio restores the backup/snapshot into a specified namespace and upon completion of the restore operation, the application is ready to be used on the cluster.

Creating a Restore

Trilio makes it easy to automatically create your Restore CRs from the Management Console.

Learn more about Creating Restores from the Management Console

Take control of Trilio, define your self-prepared YAML, and apply it to the cluster using the oc/kubectl tool.

See more Examples of Restore YAML

Troubleshooting

Problems? Learn about Troubleshooting Trilio for Kubernetes