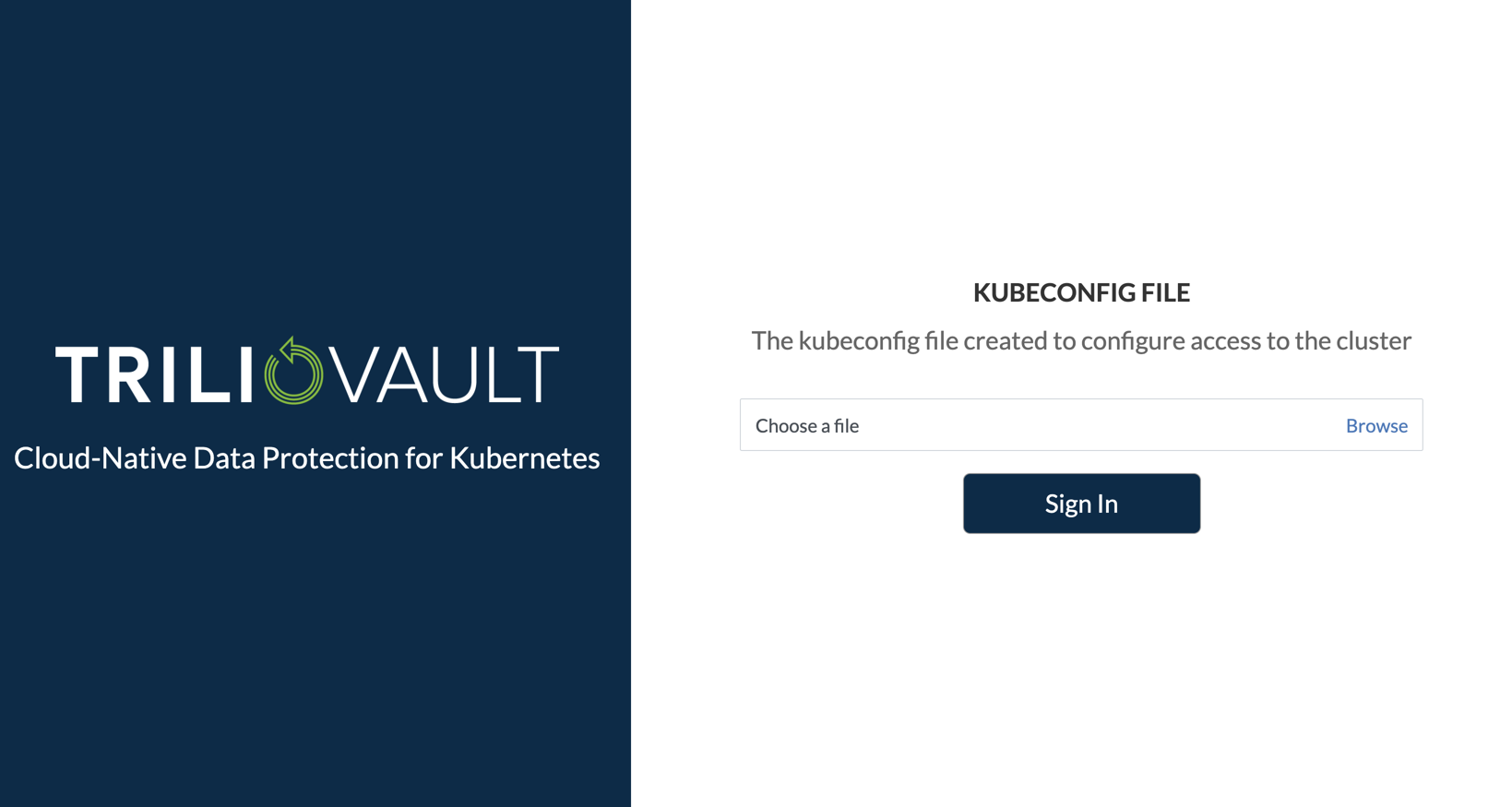

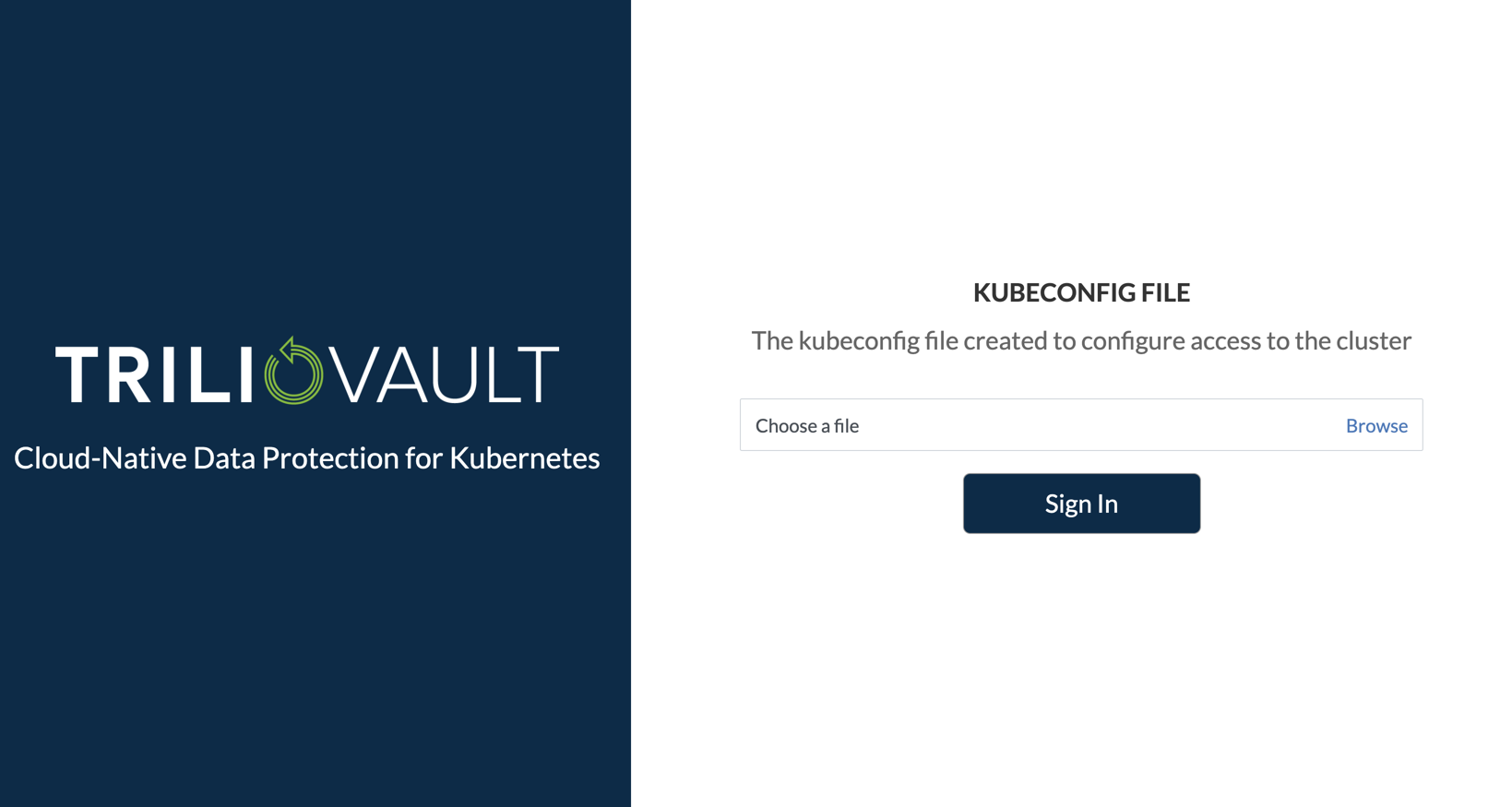

KubeConfig Authentication

This page describes how to authenticate to the Trilio Management Console with a KubeConfig file.

Create a ServiceAccount whose token the kubeconfig will use. Save the following manifest as `sa.yaml` and create it:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: svcs-acct-dply # any name you'd like
```

```shell
kubectl create -f sa.yaml
```

Create a Secret of type `kubernetes.io/service-account-token`. The `kubernetes.io/service-account.name` annotation ties it to the ServiceAccount, and Kubernetes fills in the token. Save the manifest as `sa-secret.yml` and create it:

```yaml
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: svcs-acct-dply-token
  annotations:
    kubernetes.io/service-account.name: svcs-acct-dply
```

```shell
kubectl create -f sa-secret.yml
```

Display the Secret to read the token:

```shell
kubectl describe secrets svcs-acct-dply-token
```

Example output:

```
Name: svcs-acct-dply-token
Namespace: default
Labels: <none>
Annotations: kubernetes.io/service-account.name=svcs-acct-dply
             kubernetes.io/service-account.uid=c2117d8e-3c2d-11e8-9ccd-42010a8a012f

Type: kubernetes.io/service-account-token

Data
====
ca.crt: 1115 bytes
namespace: 7 bytes
token: eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby
9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InNoaXBwYW
JsZS1kZXBsb3ktdG9rZW4tN3Nwc2oiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoic2hpcHBhYmxlLW
RlcGxveSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImMyMTE3ZDhlLTNjMmQtMTFlOC05Y2NkLTQyMD
EwYThhMDEyZiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0OnNoaXBwYWJsZS1kZXBsb3kifQ.ZWKrKdpK7aukTRKnB5SJwwov6Pj
aADT-FqSO9ZgJEg6uUVXuPa03jmqyRB20HmsTvuDabVoK7Ky7Uug7V8J9yK4oOOK5d0aRRdgHXzxZd2yO8C4ggqsr1KQsfdlU4xRWglaZGI4S31ohCAp
J0MUHaVnP5WkbC4FiTZAQ5fO_LcCokapzCLQyIuD5Ksdnj5Ad2ymiLQQ71TUNccN7BMX5aM4RHmztpEHOVbElCWXwyhWr3NR1Z1ar9s5ec6iHBqfkp_s
8TvxPBLyUdy9OjCWy3iLQ4Lt4qpxsjwE4NE7KioDPX2Snb6NWFK7lvldjYX4tdkpWdQHBNmqaD8CuVCRdEQ
```

Export the connection details of the current kubectl context to a file. `--minify` keeps only the current context, and `--flatten` embeds the CA certificate inline as `certificate-authority-data`:

```shell
kubectl config view --flatten --minify > cluster-cert.txt
```
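Copying the token and the cluster values out of command output by hand is easy to get wrong. The following is a minimal sketch that reads the same three values with kubectl's `-o jsonpath` output instead. It assumes the token Secret is named `svcs-acct-dply-token` in the `default` namespace and that your current kubectl context points at the target cluster; the `SECRET_NAME` and `NAMESPACE` variables and the function names are illustrative, not part of Trilio or kubectl. Because `kubectl get` returns Secret data base64-encoded, the token is decoded here, whereas `kubectl describe` already shows it decoded.

```shell
#!/usr/bin/env bash
# Sketch: read the values the service-account kubeconfig needs.
# Assumptions (hypothetical defaults): the token Secret is svcs-acct-dply-token
# in namespace "default" (override with SECRET_NAME / NAMESPACE), and the
# current kubectl context points at the cluster you want to connect.
SECRET_NAME="${SECRET_NAME:-svcs-acct-dply-token}"
NAMESPACE="${NAMESPACE:-default}"

# The Secret stores the token base64-encoded under .data.token.
sa_token() {
  local encoded
  encoded="$(kubectl get secret "$SECRET_NAME" -n "$NAMESPACE" \
    -o jsonpath='{.data.token}')" || return 1
  printf '%s' "$encoded" | base64 --decode
}

# --flatten inlines the CA certificate as base64; --minify keeps only the
# current context, so clusters[0] is the current cluster.
cluster_ca_data() {
  kubectl config view --flatten --minify \
    -o jsonpath='{.clusters[0].cluster.certificate-authority-data}'
}

# API server URL of the current cluster.
cluster_server() {
  kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}'
}

# Example (requires cluster access):
#   TOKEN="$(sa_token)"
#   CA_DATA="$(cluster_ca_data)"
#   SERVER="$(cluster_server)"
```

Each function prints its value on standard output, so it can be captured in a shell variable as in the commented example at the end of the block.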

Print the file to find the CA data and server address:

```shell
cat cluster-cert.txt
```

Example output (for a GKE cluster):

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURDekNDQWZPZ0F3SUJBZ0lRZmo4VVMxNXpuaGRVbG
      15a3AvSVFqekFOQmdrcWhraUc5dzBCQVFzRkFEQXYKTVMwd0t3WURWUVFERXlSaVl6RTBOelV5WXkwMk9UTTFMVFExWldFdE9HTmlPUzFrWmpSak5tU
      XlZemd4TVRndwpIaGNOTVRnd05EQTVNVGd6TVRReVdoY05Nak13TkRBNE1Ua3pNVFF5V2pBdk1TMHdLd1lEVlFRREV5UmlZekUwCk56VXlZeTAyT1RN
      MUxUUTFaV0V0T0dOaU9TMWtaalJqTm1ReVl6Z3hNVGd3Z2dFaU1BMEdDU3FHU0liM0RRRUIKQVFVQUE0SUJEd0F3Z2dFS0FvSUJBUURIVHFPV0ZXL09
      odDFTbDBjeUZXOGl5WUZPZHFON1lrRVFHa3E3enkzMApPUEQydUZyNjRpRXRPOTdVR0Z0SVFyMkpxcGQ2UWdtQVNPMHlNUklkb3c4eUowTE5YcmljT2
      tvOUtMVy96UTdUClI0ZWp1VDl1cUNwUGR4b0Z1TnRtWGVuQ3g5dFdHNXdBV0JvU05reForTC9RN2ZpSUtWU01SSnhsQVJsWll4TFQKZ1hMamlHMnp3W
      GVFem5lL0tsdEl4NU5neGs3U1NUQkRvRzhYR1NVRzhpUWZDNGYzTk4zUEt3Wk92SEtRc0MyZAo0ajVyc3IwazNuT1lwWDFwWnBYUmp0cTBRZTF0RzNM
      VE9nVVlmZjJHQ1BNZ1htVndtejJzd2xPb24wcldlRERKCmpQNGVqdjNrbDRRMXA2WXJBYnQ1RXYzeFVMK1BTT2ROSlhadTFGWWREZHZyQWdNQkFBR2p
      JekFoTUE0R0ExVWQKRHdFQi93UUVBd0lDQkRBUEJnTlZIUk1CQWY4RUJUQURBUUgvTUEwR0NTcUdTSWIzRFFFQkN3VUFBNElCQVFCQwpHWWd0R043SH
      JpV2JLOUZtZFFGWFIxdjNLb0ZMd2o0NmxlTmtMVEphQ0ZUT3dzaVdJcXlIejUrZ2xIa0gwZ1B2ClBDMlF2RmtDMXhieThBUWtlQy9PM2xXOC9IRmpMQ
      VZQS3BtNnFoQytwK0J5R0pFSlBVTzVPbDB0UkRDNjR2K0cKUXdMcTNNYnVPMDdmYVVLbzNMUWxFcXlWUFBiMWYzRUM3QytUamFlM0FZd2VDUDNOdHJM
      dVBZV2NtU2VSK3F4TQpoaVRTalNpVXdleEY4cVV2SmM3dS9UWTFVVDNUd0hRR1dIQ0J2YktDWHZvaU9VTjBKa0dHZXJ3VmJGd2tKOHdxCkdsZW40Q2R
      jOXJVU1J1dmlhVGVCaklIYUZZdmIxejMyVWJDVjRTWUowa3dpbHE5RGJxNmNDUEI3NjlwY0o1KzkKb2cxbHVYYXZzQnYySWdNa1EwL24KLS0tLS1FTk
      QgQ0VSVElGSUNBVEUtLS0tLQo=
    server: https://35.203.181.169
  name: gke_jfrog-200320_us-west1-a_cluster
contexts:
- context:
    cluster: gke_jfrog-200320_us-west1-a_cluster
    user: gke_jfrog-200320_us-west1-a_cluster
  name: gke_jfrog-200320_us-west1-a_cluster
current-context: gke_jfrog-200320_us-west1-a_cluster
kind: Config
preferences: {}
users:
- name: gke_jfrog-200320_us-west1-a_cluster
  user:
    auth-provider:
      config:
        access-token: ya29.Gl2YBba5duRR8Zb6DekAdjPtPGepx9Em3gX1LAhJuYzq1G4XpYwXTS_wF4cieZ8qztMhB35lFJC-DJR6xcB02oXX
          kiZvWk5hH4YAw1FPrfsZWG57x43xCrl6cvHAp40
        cmd-args: config config-helper --format=json
        cmd-path: /Users/ambarish/google-cloud-sdk/bin/gcloud
        expiry: 2018-04-09T20:35:02Z
        expiry-key: '{.credential.token_expiry}'
        token-key: '{.credential.access_token}'
      name: gcp
```

Create a kubeconfig file for the service account. Replace the placeholders with the `token` from the Secret and with the `certificate-authority-data` and `server` values from `cluster-cert.txt`:

```yaml
apiVersion: v1
kind: Config
users:
- name: svcs-acct-dply
  user:
    token: <replace this with token info>
clusters:
- cluster:
    certificate-authority-data: <replace this with certificate-authority-data info>
    server: <replace this with server info>
  name: self-hosted-cluster
contexts:
- context:
    cluster: self-hosted-cluster
    user: svcs-acct-dply
  name: svcs-acct-context
current-context: svcs-acct-context
```

Grant the service account access with a ClusterRole. These rules allow `get` and `list` on all `triliovault.trilio.io` resources, `create` on `policies`, and `create` on core `secrets`:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: svcs-role
rules:
- apiGroups: ["triliovault.trilio.io"]
  resources: ["*"]
  verbs: ["get", "list"]
- apiGroups: ["triliovault.trilio.io"]
  resources: ["policies"]
  verbs: ["create"]
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
```

Bind the ClusterRole to the service account:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: sample-clusterrolebinding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: svcs-role
subjects:
- kind: ServiceAccount
  name: svcs-acct-dply
  namespace: default
```
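Filling in the kubeconfig placeholders can be scripted as well. The following is a minimal sketch that writes a kubeconfig with the same user (`svcs-acct-dply`), cluster (`self-hosted-cluster`) and context (`svcs-acct-context`) names as the template on this page. The `write_sa_kubeconfig` function and the `sa.kubeconfig` filename are hypothetical, and the function does not validate its inputs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a kubeconfig for the svcs-acct-dply ServiceAccount.
# Usage: write_sa_kubeconfig TOKEN CA_DATA SERVER OUTFILE
write_sa_kubeconfig() {
  local token="$1" ca_data="$2" server="$3" outfile="$4"
  # Unquoted EOF so ${token}, ${ca_data} and ${server} are expanded.
  cat > "$outfile" <<EOF
apiVersion: v1
kind: Config
users:
- name: svcs-acct-dply
  user:
    token: ${token}
clusters:
- cluster:
    certificate-authority-data: ${ca_data}
    server: ${server}
  name: self-hosted-cluster
contexts:
- context:
    cluster: self-hosted-cluster
    user: svcs-acct-dply
  name: svcs-acct-context
current-context: svcs-acct-context
EOF
  # The file contains a bearer token, so restrict it to the owner.
  chmod 600 "$outfile"
}

# Example (requires cluster access; TOKEN, CA_DATA and SERVER set beforehand):
#   write_sa_kubeconfig "$TOKEN" "$CA_DATA" "$SERVER" sa.kubeconfig
#   kubectl --kubeconfig sa.kubeconfig auth can-i list policies.triliovault.trilio.io
#   kubectl --kubeconfig sa.kubeconfig auth can-i delete policies.triliovault.trilio.io
```

With the ClusterRole and ClusterRoleBinding in place, running `kubectl auth can-i` against the new kubeconfig is one way to check that the binding took effect: `list` on `policies.triliovault.trilio.io` should be allowed, while a verb the ClusterRole does not grant, such as `delete`, should be refused.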