Architecture and Concepts

This section describes the continuous restore functionality.

Introduction

One of the main features introduced in T4K 3.0.0 is continuous restore, which lets users stage backups on remote clusters as soon as each new backup becomes available. In the event of a disaster, users can recover applications instantly on the remote clusters. Users can also test restores regularly to gain confidence in their disaster recovery (DR) plan.

The continuous restore feature is implemented as a cloud-native service designed for multi-cluster and multi-cloud environments. It is flexible enough to support a fabric topology in which any application in any Kubernetes cluster can designate any other cluster in the fabric as a restore site. It makes no assumptions about connectivity between the clusters; the only requirement is that the source and target clusters share a backup target that both can access.

Note: Ensure all clusters are deployed with the same version of T4K for consistent operations.

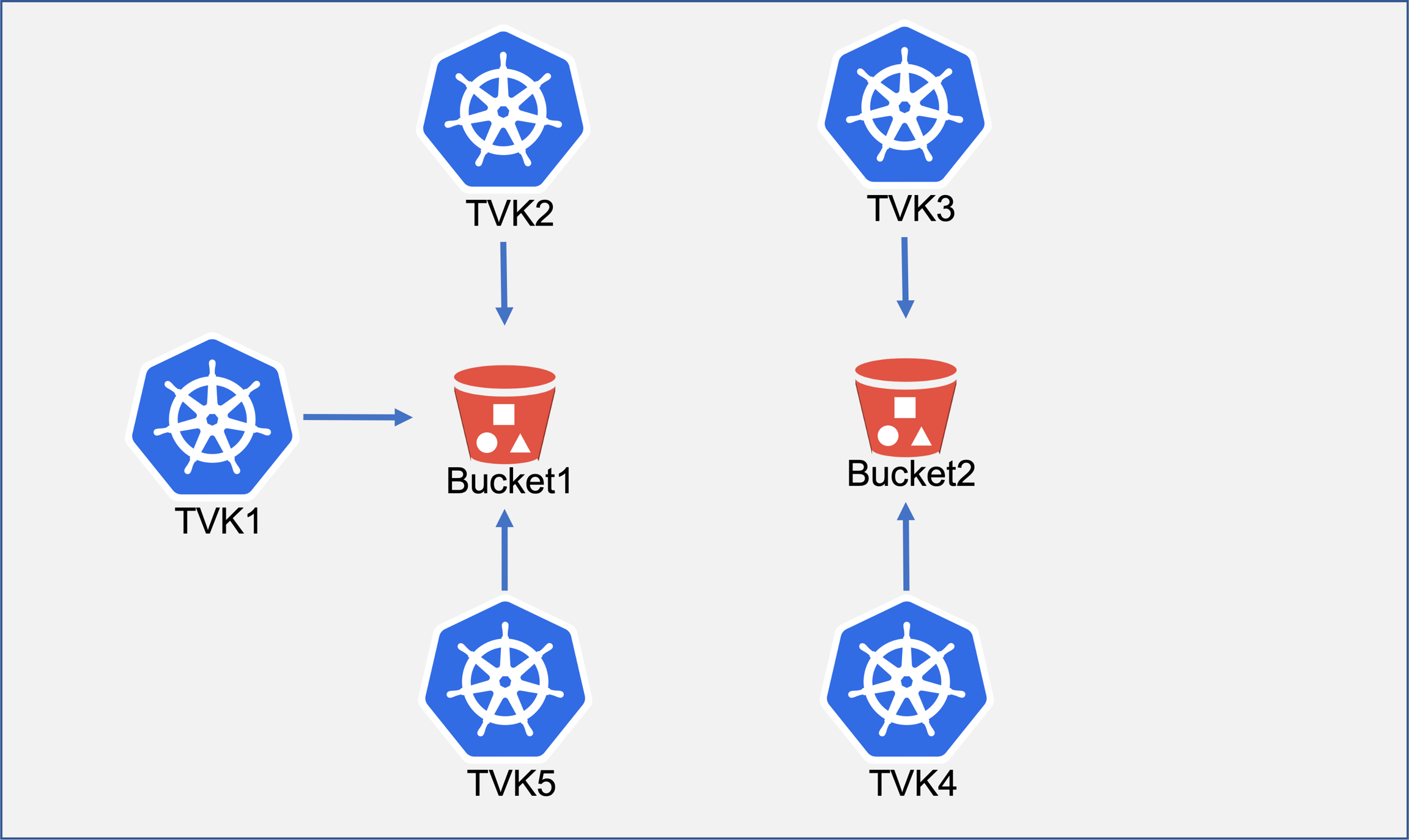

As shown in the diagram above, TVK1, TVK2, and TVK5 are configured to use Bucket1 as a backup target. Therefore, a backup plan for an application on TVK1 can designate TVK2 and TVK5 as remote sites for continuous restore. Likewise, a backup plan for an application on TVK3 can designate TVK4 as a remote site.

Continuous restore stages only the data of the persistent volumes onto remote clusters, as PVs. The set of PVs created by one restore is called a consistent set: a collection of PVs that holds the application data and is guaranteed to start the application successfully. Once a consistent set is created from backup data, it cannot be modified; it can only be deleted. Every backup of the application results in a new consistent set, and the number of PVs in each consistent set matches the number of PVs in the corresponding backup, so different consistent sets of the same application may contain different numbers of PVs. Consistent sets are independent of one another: deleting one does not affect the other consistent sets of the application. The continuous restore policy in the backup plan on the source cluster determines the number of consistent sets at each remote cluster.
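The rules for consistent sets can be illustrated with a small in-memory model. This is a hedged sketch, not T4K code: the `ConsistentSet` and `RemoteSite` classes, the PV naming scheme, and the assumption that the continuous restore policy is a plain "keep the newest N" count are all hypothetical. It shows that each set mirrors the PV count of its backup, is immutable once created, and can be pruned without touching the others.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


# Hypothetical model for illustration only; these are not T4K types.
@dataclass(frozen=True)  # frozen: a consistent set can be deleted but never modified
class ConsistentSet:
    backup_name: str
    pv_names: Tuple[str, ...]  # one staged PV per PV in the source backup


@dataclass
class RemoteSite:
    # Assumption: the continuous restore policy is a simple "keep the newest N" count.
    max_consistent_sets: int
    consistent_sets: List[ConsistentSet] = field(default_factory=list)

    def stage_backup(self, backup_name: str, backup_pvs: List[str]) -> ConsistentSet:
        """Create a consistent set for a new backup, then prune the oldest sets."""
        cs = ConsistentSet(backup_name, tuple(f"{backup_name}-{pv}" for pv in backup_pvs))
        self.consistent_sets.append(cs)
        # Each set owns its own PVs, so deleting the oldest one leaves the others intact.
        while len(self.consistent_sets) > self.max_consistent_sets:
            self.consistent_sets.pop(0)
        return cs
```

For example, with `max_consistent_sets=2`, staging backup-1 (one PV), backup-2 (two PVs), and backup-3 (one PV) leaves the consistent sets for backup-2 and backup-3, each with its own PV count.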

Continuous restore is enabled per backup plan. Users may create consistent sets on one or more remote clusters and may keep a different number of consistent sets on each cluster. An example of a backup plan with continuous restore enabled is given below:

Assume that the backup plan sample-backupplan is created on TVK1 and designates TVK2 and TVK5 as remote sites for continuous restore, with TVK2 holding four consistent sets and TVK5 holding six. When the user updates the backup plan spec, TVK2 and TVK5 are notified and create consistent sets from the latest backups. Once the consistent sets are created at the remote sites, TVK1 is notified, and the backup plan spec is updated with the actual number of consistent sets on each remote site.
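The round trip for sample-backupplan can be sketched as follows. This is a simplified, hypothetical simulation: in T4K the sites exchange this state through the event target rather than through direct function calls, and the helper names are invented. It also assumes, for illustration, that a remote site may hold fewer consistent sets than requested (for example, when fewer backups exist yet), which is why the source records the actual number rather than the desired one.

```python
from typing import Dict, List


def stage_latest(backups: List[str], wanted: int) -> List[str]:
    """Pick the newest `wanted` backups (the list is ordered oldest to newest)."""
    return backups[-wanted:] if wanted > 0 else []


def report_actual_counts(desired: Dict[str, int], backups: List[str]) -> Dict[str, int]:
    """Simulate each remote site staging consistent sets and reporting back to the source."""
    status: Dict[str, int] = {}
    for site, wanted in desired.items():
        staged = stage_latest(backups, wanted)  # done by the remote cluster
        status[site] = len(staged)              # what the source backup plan records
    return status


# Desired counts from the sample-backupplan example on TVK1.
desired = {"TVK2": 4, "TVK5": 6}
```

With five backups available, `report_actual_counts(desired, backups)` reports four consistent sets for TVK2 and five for TVK5; once ten backups exist, it reports four and six.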

The backup plan with consistent sets on remote clusters is shown below:

Architecture

T4K’s continuous restore feature includes a collection of services deployed on each Kubernetes cluster.

Event Target

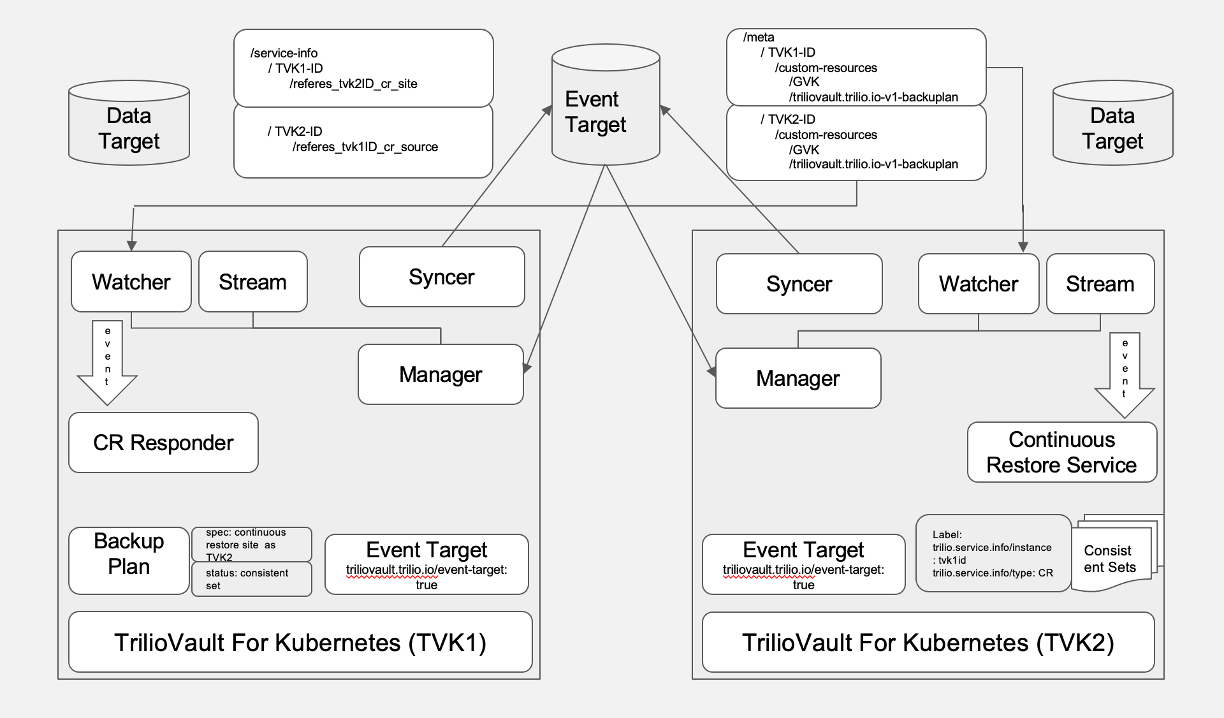

An event target is an ordinary backup target that T4K uses to exchange continuous restore state information between clusters. Clusters that participate in continuous restore rely only on the state stored in the event target; T4K makes no other assumptions about connectivity between clusters. All participating clusters must configure the same backup target as their event target. When a new event target resource is created, the target controller spawns two new services: the syncher and the service manager.

Syncher

The primary purpose of the syncher is to persist the state of T4K resources to the event target and to keep that persistent state in sync with the T4K resources. The syncher runs on every T4K instance where an event target is configured. Its scope is cluster-specific: it only synchronizes the state of T4K resources on its own cluster with the corresponding persistent state on the event target.

When a user creates an event target, the target controller validates the target. It then creates a syncher deployment, a service manager deployment, and a resource-list ConfigMap for the event target.
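The controller step above can be pictured as "validate, then create three objects". This is a hedged sketch only: the `EventTarget` fields, the validation rules, and the deployment names are hypothetical; only the `<target_name>-<target_ns>-resource-list` ConfigMap name pattern comes from this document.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EventTarget:
    name: str
    namespace: str
    vendor: str      # hypothetical field: "NFS" or "ObjectStore"
    reachable: bool  # hypothetical result of a connectivity check


def resources_for_event_target(et: EventTarget) -> List[str]:
    """Return the objects a target controller would create for a valid event target."""
    if et.vendor not in ("NFS", "ObjectStore"):
        raise ValueError(f"unsupported target type: {et.vendor}")
    if not et.reachable:
        raise ValueError(f"target {et.namespace}/{et.name} failed validation")
    return [
        f"Deployment/{et.name}-syncher",          # hypothetical name
        f"Deployment/{et.name}-service-manager",  # hypothetical name
        f"ConfigMap/{et.name}-{et.namespace}-resource-list",
    ]
```

Validation comes first so that no services are spawned for a target that the clusters could not share.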

The syncher's main objectives are:

Store the current state of the cluster on the target (NFS or object store). Here, state refers to all T4K-related custom resources (CRs) present in the etcd database.

Populate service-info with information related to the continuous restore service.

Store, update, and maintain the current state of its instance on the target.
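Keeping the persisted state in sync amounts to a diff between the CRs in the cluster and their copies on the target. The sketch below is a hypothetical illustration, not the syncher's actual logic: keys are invented `Kind/namespace/name` strings and values stand in for serialized YAML. It writes new or changed resources and deletes copies of resources that no longer exist in the cluster.

```python
from typing import Dict, List


def plan_sync(cluster_state: Dict[str, str], target_state: Dict[str, str]) -> Dict[str, List[str]]:
    """Diff CRs in the cluster against the copies stored on the target.

    Keys are hypothetical "Kind/namespace/name" strings; values are serialized YAML.
    """
    writes = sorted(k for k, v in cluster_state.items() if target_state.get(k) != v)
    deletes = sorted(k for k in target_state if k not in cluster_state)
    return {"write": writes, "delete": deletes}


def apply_sync(cluster_state: Dict[str, str], target_state: Dict[str, str]) -> Dict[str, List[str]]:
    """Make the persisted state match the cluster, then return what changed."""
    plan = plan_sync(cluster_state, target_state)
    for key in plan["write"]:
        target_state[key] = cluster_state[key]  # create or update the YAML file
    for key in plan["delete"]:
        del target_state[key]                   # resource no longer exists in the cluster
    return plan
```

After `apply_sync` runs, a second `plan_sync` returns empty lists, which is the "in sync" condition the syncher maintains.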

The syncher on each cluster manages two blobs of persistent state:

meta

The meta directory includes the YAML files of all resources that T4K manages, including Policy, Hook, Target, BackupPlan, Backup, and ConsistentSet.

service-info

As the name suggests, service-info includes information about a particular T4K service that the current T4K resources reference. For example, backupplan1 on TVK1 references TVK2 as a remote site for consistent sets, so the syncher on TVK1 records that TVK1 references TVK2. The syncher on each cluster also records a heartbeat, so that other clusters participating in the service know the health of the continuous restore service.
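A heartbeat only helps if readers treat a stale one as unhealthy. The following sketch assumes a hypothetical record layout (`instanceID`, `refers`, `heartbeat` fields) and a hypothetical five-minute staleness threshold; neither is taken from T4K.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable

HEARTBEAT_TIMEOUT = timedelta(minutes=5)  # hypothetical staleness threshold


def utc_now() -> datetime:
    return datetime.now(timezone.utc)


def service_info_record(instance_id: str, referenced: Iterable[str], now: datetime) -> Dict[str, object]:
    """Hypothetical service-info record: who this instance references, plus a heartbeat."""
    return {
        "instanceID": instance_id,
        "refers": sorted(referenced),
        "heartbeat": now.isoformat(),
    }


def is_service_healthy(record: Dict[str, object], now: datetime,
                       timeout: timedelta = HEARTBEAT_TIMEOUT) -> bool:
    """Treat the remote service as healthy if its last heartbeat is recent enough."""
    last = datetime.fromisoformat(str(record["heartbeat"]))
    return now - last <= timeout
```

The syncher on TVK1 would rewrite its record periodically with a fresh timestamp, and TVK2 would call a check like `is_service_healthy` when reading it.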

Service Manager

The service manager manages the lifecycle of the watcher and the continuous restore service stack. It monitors the service-info directory on the event target and checks whether its parent instance ID is present in any discovered file whose name starts with the keyword refers, for example refers-instanceID-continuous-restore-site.

When the service manager finds a valid record, it spawns the appropriate services on the cluster. It also updates the ConfigMap prepared for the watcher service stack with the instance IDs to be monitored.
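The discovery step can be sketched as a scan over file paths on the event target. The directory layout here is an assumption made for illustration: `service-info/<sourceID>/refers-<referencedID>-continuous-restore-site`, where a match means the source instance must be watched. The helper names are hypothetical. The prefix check includes the trailing dash so that an ID such as `tvk1` does not accidentally match `tvk10`.

```python
from typing import Iterable, List


def discover_watch_list(own_id: str, paths: Iterable[str]) -> List[str]:
    """Return the instance IDs whose service-info references `own_id`.

    Assumed (hypothetical) layout:
        service-info/<sourceID>/refers-<referencedID>-continuous-restore-site
    """
    prefix = f"refers-{own_id}-"
    watch = set()
    for path in paths:
        parts = path.split("/")
        if len(parts) != 3 or parts[0] != "service-info":
            continue  # not a service-info record
        source_id, file_name = parts[1], parts[2]
        if file_name.startswith(prefix):
            watch.add(source_id)
    return sorted(watch)


def manager_state(own_id: str, paths: Iterable[str]) -> str:
    """Comma-separated value the service manager would write to its ConfigMap."""
    return ",".join(discover_watch_list(own_id, paths))
```

In the Bucket1 topology, the manager on TVK2 would find TVK1's record and start watching TVK1, while an instance that nobody references gets an empty list and spawns nothing.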

Watcher

The Watcher is responsible for monitoring the resource state changes on the event target and generating corresponding events on the host cluster.

Prerequisites

The user must configure the same event target on all participating clusters.

The source cluster’s syncher service must create/update the persistent state of T4K resources on the event target.

Initiating Watcher Service

The service manager is responsible for starting and stopping the watcher service.

The service manager starts the watcher service after it discovers that its T4K instance is referenced as a service by another T4K instance on the same or a different cluster.

The event target controller creates a ConfigMap, <target_name>-resource-list/<target_name>-<target_ns>-resource-list, which is shared among the manager, syncher, and watcher services. The manager service initiates the watcher service only if at least one T4K instance ID is to be watched on the event target.

Mounting Event Target

Once the watcher service starts, it mounts the event target inside the watcher container and reads from the event target.

Watcher uses a datastore-attacher for S3-based targets and NFS PVs for NFS-based targets.

Adding Watch on New Instance(s)

The manager service configures the watcher service to watch a new instance ID through the ConfigMap <target_name>-resource-list/<target_name>-<target_ns>-resource-list, where <target_name> is the event target name and <target_ns> is the event target namespace.

The ConfigMap <target_name>-resource-list contains two keys. The service manager manages the manager-state key.

The watcher service manages the watcher-state key.

Manager-state holds the comma-separated instance IDs for which the service manager instructs the watcher to add a watch.

Watcher-state holds comma-separated instance IDs, which the Watcher service is watching now.
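Because manager-state is the requested set and watcher-state is the active set, the watcher's job reduces to comparing two comma-separated lists. This is a hedged sketch with hypothetical helper names. It also assumes, beyond what is stated above, that an ID dropped from manager-state should stop being watched.

```python
from typing import Dict, List, Tuple


def parse_ids(value: str) -> List[str]:
    """Split a comma-separated instance ID list, ignoring blanks."""
    return [i.strip() for i in value.split(",") if i.strip()]


def pending_changes(data: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Compare manager-state (requested) with watcher-state (active)."""
    wanted = set(parse_ids(data.get("manager-state", "")))
    watching = set(parse_ids(data.get("watcher-state", "")))
    # Assumption: IDs dropped from manager-state should stop being watched.
    return sorted(wanted - watching), sorted(watching - wanted)


def reconcile_watches(data: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Hypothetical watcher step: apply the changes, then record them in watcher-state."""
    to_add, to_remove = pending_changes(data)
    # ... start watches for to_add and stop watches for to_remove here ...
    data["watcher-state"] = ",".join(sorted(parse_ids(data.get("manager-state", ""))))
    return to_add, to_remove
```

Splitting ownership this way means each service writes only its own key, so the manager and watcher never overwrite each other's state.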

Structure of ConfigMap

<target_name>-resource-list/<target_name>-<target_ns>-resource-list

A sample configMap looks as follows:

NATS Server

The watcher service collects state changes from the event target on the local cluster and publishes them as events to the NATS server, so that consumer services can consume them as required. The NATS service works with the watcher, syncher, and manager to enable cross-cluster communication.

This section describes how the NATS service receives and stores the events generated by the watcher and pushes them to local services, such as the continuous restore service and the continuous restore responder, according to their subscriptions.

Initiating NATS service

The service manager is responsible for starting and stopping the NATS service. T4K uses only the NATS JetStream functionality.

The service manager starts the NATS service once it discovers that it needs to perform operations for other TrilioVault instances.

The service manager initiates the NATS service only if the watcher service has at least one instance ID to watch on the event target.
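The watcher and NATS services share the same start condition. The sketch below derives both from the manager-state value; using manager-state as the input and the `services_to_run` helper are assumptions made for illustration.

```python
from typing import Dict


def services_to_run(manager_state: str) -> Dict[str, bool]:
    """Decide whether the watcher and NATS services should be running (hypothetical helper)."""
    instance_ids = [i for i in (s.strip() for s in manager_state.split(",")) if i]
    needed = len(instance_ids) > 0
    # Both services are only useful when at least one instance ID must be watched.
    return {"watcher": needed, "nats": needed}
```

An empty or blank manager-state keeps both services stopped, so clusters that are not anyone's restore site run neither.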

Publishing Events to NATS

The watcher service generates events, creates a NATS client, and pushes the events to the NATS service.

The watcher will create only one NATS stream on the NATS server, which can have one or more NATS subjects depending on the instance(s).

Event Generation Control Flow

The watcher service creates a NATS JetStream client and publishes events to the server.

The NATS client checks whether a stream named INSTANCES is present on the NATS server.

If the stream is not present, the client creates it with an event retention policy of one day, so every event on the stream is automatically deleted after one day.
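The control flow above (create INSTANCES only if missing, publish one subject per instance, expire events after one day) can be modeled without a server. This is a toy in-memory stand-in, not the NATS client API; the `INSTANCES.<instanceID>` subject naming is a hypothetical choice. In a real deployment the NATS server enforces the maximum message age configured on the stream.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

ONE_DAY = 24 * 60 * 60  # retention period in seconds


@dataclass
class Stream:
    name: str
    max_age: float  # seconds a message is kept before it expires
    messages: List[Tuple[float, str, bytes]] = field(default_factory=list)


class InMemoryJetStream:
    """Toy stand-in for a JetStream server; this is NOT the NATS client API."""

    def __init__(self) -> None:
        self.streams: Dict[str, Stream] = {}

    def ensure_stream(self, name: str, max_age: float) -> Stream:
        # Create the stream only if it does not exist yet; otherwise reuse it.
        if name not in self.streams:
            self.streams[name] = Stream(name, max_age)
        return self.streams[name]

    def publish(self, stream: Stream, subject: str, payload: bytes, now: float) -> None:
        stream.messages.append((now, subject, payload))

    def expire(self, stream: Stream, now: float) -> None:
        # Drop messages that have reached the stream's max age.
        stream.messages = [m for m in stream.messages if now - m[0] < stream.max_age]


def publish_event(js: InMemoryJetStream, instance_id: str, payload: bytes, now: float) -> None:
    """Watcher side: one INSTANCES stream, one subject per watched instance (hypothetical naming)."""
    stream = js.ensure_stream("INSTANCES", ONE_DAY)
    js.publish(stream, f"INSTANCES.{instance_id}", payload, now)
```

Publishing for two instances creates a single INSTANCES stream with two subjects, and an event published a full day earlier is gone after `expire` runs.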

Consuming Events from NATS Server

Consumers of NATS events are services such as the continuous restore service and the continuous restore responder.

Continuous Restore Service/Responder

The service manager enables the continuous restore services when it finds a reference to its instance ID in the service-info directory on the event target. The continuous restore services mount the event target, subscribe to the events for the relevant instances in NATS JetStream, and process the events they receive.

The main objectives of the continuous restore services are:

Process the ContinuousRestore Policy, Target, BackupPlan, ClusterBackupPlan, Backup, and ClusterBackup events that require the continuous restore service, and create the required CRs, such as Data Target, Policy, ContinuousRestorePlan, and ConsistentSet.

Keep the ContinuousRestoreConfig in the BackupPlan and ClusterBackupPlan in sync with the current state of the destination cluster.

Continuous restore service operations are idempotent: after a restart, the service resumes processing events from where it left off and ignores events it has already processed. It determines the work to do from the events in NATS and the current state of the cluster.
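Idempotency comes from two checks: skip events already seen, and skip work whose result already exists in the cluster. The sketch below is hypothetical (the `Event` fields, the sequence-number bookkeeping, and the `ConsistentSet/<backup>` naming are invented). It shows why replaying events after a restart creates nothing twice, even when the record of processed events is lost.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass(frozen=True)
class Event:
    seq: int    # hypothetical stream sequence number
    kind: str   # e.g. "Backup"
    name: str


@dataclass
class ContinuousRestoreProcessor:
    processed: Set[int] = field(default_factory=set)  # would be persisted in practice
    created: List[str] = field(default_factory=list)  # stands in for CRs in the cluster

    def handle(self, event: Event) -> bool:
        """Process an event once; return False if it was already processed."""
        if event.seq in self.processed:
            return False
        if event.kind == "Backup":
            cs = f"ConsistentSet/{event.name}"
            # Check the current cluster state too, so a lost `processed` set is harmless.
            if cs not in self.created:
                self.created.append(cs)
        self.processed.add(event.seq)
        return True
```

Replaying the same events returns `False` for each one, and a fresh processor that starts from the existing cluster state handles the events again without creating duplicate consistent sets.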