Known Issues and Workarounds

This page describes known issues you may encounter and possible workarounds for them.

Management Console not accessible using NodePort

If you have completed all the configuration in the Accessing the Management Console instructions and still cannot access the UI, check the firewall rules for the Kubernetes cluster nodes.

For example, to check the firewall rules on a Google GKE cluster:

Search the VPC Network on GCP web console

Select `Firewall` option from the left pane

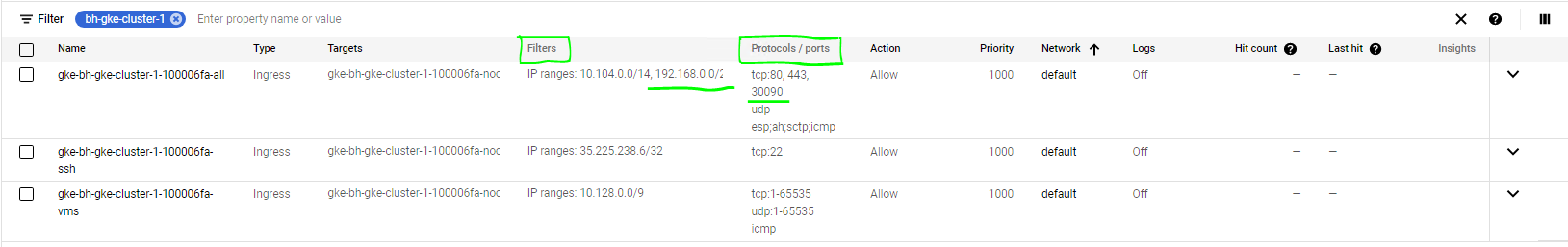

Search the rules with your Kubernetes cluster name

In the list of firewall rules, check the following columns:

Filters: shows the source IPs that can access the T4K Management Console hostname and NodePort.

Protocols/Ports: shows the ports that are accessible on the cluster nodes.

Verify that the NodePort assigned to the service `k8s-triliovault-ingress-gateway` is included in the firewall rule's protocols and ports.
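The two checks above can be scripted as a small shell sketch. It assumes that `kubectl` points at the cluster, that the `gcloud` CLI is authenticated against the cluster's GCP project, and that the relevant firewall rule names contain the cluster name. The function names are hypothetical helpers, and `<namespace>` is whichever namespace T4K is installed in.

```shell
# Hypothetical helper: print the NodePort(s) assigned to the Trilio ingress service.
# Usage: tvk_ingress_nodeports <namespace>
tvk_ingress_nodeports() {
  local ns="$1"
  kubectl get svc k8s-triliovault-ingress-gateway -n "$ns" \
    -o jsonpath='{.spec.ports[*].nodePort}'
}

# Hypothetical helper: list GCP firewall rules whose name contains the cluster
# name, so their source ranges and allowed ports can be compared with the NodePort.
# Usage: gke_firewall_rules <cluster-name>
gke_firewall_rules() {
  local cluster="$1"
  gcloud compute firewall-rules list --filter="name~${cluster}"
}
```

For example, run `tvk_ingress_nodeports <namespace>` and confirm that each printed port appears in the allowed ports of a rule returned by `gke_firewall_rules <cluster-name>`.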

Target Creation Failure

`kubectl apply -f <backup_target.yaml>` does not succeed, and the Target resource goes into the `Unavailable` state.

A Target is typically marked `Unavailable` because of insufficient permissions or a lack of network connectivity. Creating a Target resource launches a validation pod that checks whether Trilio can connect to and write to the Target. Trilio deletes this pod once validation completes, which also removes its logs. To capture them, repeat the operation and check the validation pod's logs while it is still running.

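Because the validation pod is short-lived, it helps to wait for it and stream its logs as soon as it appears. The following sketch assumes the validation pod's name contains the Target name (adjust the filter if your version names it differently); the function name is hypothetical. On OpenShift, `oc` can be used in place of `kubectl`. Start the function in one terminal, then repeat the Target creation in another.

```shell
# Hypothetical helper: wait for a pod whose name contains the Target name
# (naming is an assumption) and follow its logs before Trilio deletes it.
# Usage: target_validation_logs <namespace> <target-name>
target_validation_logs() {
  local ns="$1" target="$2" pod=""
  # Poll every 2 seconds until a matching pod shows up (Ctrl-C to stop).
  while [ -z "$pod" ]; do
    pod=$(kubectl get pods -n "$ns" -o name | grep -F -- "$target" | head -n 1)
    if [ -z "$pod" ]; then sleep 2; fi
  done
  # -f keeps streaming while the validation pod is still running.
  kubectl logs -f "$pod" -n "$ns"
}
```

If `kubectl logs` reports that the container is still being created, run the function again.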

Backup Failures

Use the following steps to analyze backup failures.

To troubleshoot backup issues, it helps to know the phases of a backup job. Each phase runs in its own pod, named after the backup:

`<backupname>-snapshot`: Trilio uses CSI snapshot functionality to take PV snapshots. If the backup fails in this phase, try taking a manual snapshot of the PV to confirm that all required drivers are present and that the operation works without Trilio.

`<backupname>-upload`: In this phase, Trilio uploads metadata and data to the Target. If the backup fails in this phase, check the logs of the upload pod for errors.
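To test CSI snapshots without Trilio, you can create a `VolumeSnapshot` for one of the application's PVCs directly. This sketch assumes the external snapshot controller and the `snapshot.storage.k8s.io/v1` CRDs are installed and that a `VolumeSnapshotClass` exists for the PVC's CSI driver; the function name and the `-manual-test` suffix are hypothetical.

```shell
# Hypothetical helper: take a manual CSI snapshot of a PVC, outside Trilio.
# Usage: try_manual_snapshot <namespace> <pvc-name> <volumesnapshotclass>
try_manual_snapshot() {
  local ns="$1" pvc="$2" class="$3"
  # Create a VolumeSnapshot of the PVC from an inline manifest.
  kubectl apply -n "$ns" -f - <<EOF
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ${pvc}-manual-test
spec:
  volumeSnapshotClassName: ${class}
  source:
    persistentVolumeClaimName: ${pvc}
EOF
  # READYTOUSE should become true once the CSI driver finishes the snapshot.
  kubectl get volumesnapshot "${pvc}-manual-test" -n "$ns"
}
```

If `READYTOUSE` stays `false`, `kubectl describe volumesnapshot <pvc-name>-manual-test -n <namespace>` shows the snapshot controller's events. Delete the test snapshot afterwards with `kubectl delete volumesnapshot <pvc-name>-manual-test -n <namespace>`.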

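For either phase, the Backup resource's status and the phase pods' logs are the first places to look. The sketch below assumes the phase pods are named with the `<backupname>-` prefix described above and that the Backup CRD is `backups.triliovault.trilio.io`; the function name is hypothetical. Logs are only available while the pods still exist. On OpenShift, `oc` works the same way.

```shell
# Hypothetical helper: describe a Trilio Backup and print the logs of its
# phase pods (assumed to be named <backupname>-snapshot-* / <backupname>-upload-*).
# Usage: backup_phase_logs <namespace> <backupname>
backup_phase_logs() {
  local ns="$1" backup="$2" pod
  # Status and events of the Backup custom resource.
  kubectl describe backups.triliovault.trilio.io "$backup" -n "$ns"
  # Logs of every pod whose name contains "<backupname>-".
  for pod in $(kubectl get pods -n "$ns" -o name | grep -F -- "${backup}-"); do
    echo "=== ${pod} ==="
    kubectl logs "$pod" -n "$ns" --all-containers=true
  done
}
```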

Restore Failures

Restore failures are most commonly caused by resource conflicts, for example when a backup is restored into the same namespace as the original application and the names of the restored resources conflict with the existing ones. Check the logs to find the root cause.

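The sketch below gathers the Restore resource's status, the events recorded against it, and the logs of pods whose names contain the restore name, which is where conflict errors would surface. The Restore CRD name `restores.triliovault.trilio.io`, the pod naming, and the function name are assumptions; `oc` can replace `kubectl` on OpenShift.

```shell
# Hypothetical helper: collect status, events, and pod logs for a Trilio Restore.
# Usage: restore_diagnostics <namespace> <restore-name>
restore_diagnostics() {
  local ns="$1" restore="$2" pod
  # Status conditions of the Restore custom resource.
  kubectl describe restores.triliovault.trilio.io "$restore" -n "$ns"
  # Events recorded against objects with the restore's name.
  kubectl get events -n "$ns" --field-selector "involvedObject.name=${restore}"
  # Logs of pods whose name contains the restore name (naming is an assumption).
  for pod in $(kubectl get pods -n "$ns" -o name | grep -F -- "$restore"); do
    echo "=== ${pod} ==="
    kubectl logs "$pod" -n "$ns" --all-containers=true
  done
}
```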

Webhooks are installed

Problem Description: A Trilio operation fails with the error `Internal error occurred: failed` or `service "k8s-triliovault-webhook-service" not found`.

Solution: This can happen when T4K has been installed in multiple namespaces. Cleaning up those installations does not remove their webhook configurations, which are cluster-scoped. List all webhook instances with `kubectl get validatingwebhookconfigurations` and `kubectl get mutatingwebhookconfigurations`.

Delete any duplicate entries.
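The listing can be narrowed to Trilio entries and annotated with the namespace of the service each webhook calls, which makes stale entries (those pointing at a namespace where T4K is no longer installed) easier to identify. This is a sketch: the function name is hypothetical, and it assumes Trilio's webhook configuration names contain `trilio`.

```shell
# Hypothetical helper: print kind, name, and service namespace for every
# webhook configuration whose line mentions "trilio".
# Webhook configurations are cluster-scoped, so no -n flag is needed.
list_trilio_webhooks() {
  local kind line
  for kind in validatingwebhookconfigurations mutatingwebhookconfigurations; do
    kubectl get "$kind" \
      -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.webhooks[*].clientConfig.service.namespace}{"\n"}{end}' \
      | grep -i trilio \
      | while IFS= read -r line; do
          printf '%s\t%s\n' "$kind" "$line"
        done
  done
}
```

Delete a stale entry with `kubectl delete validatingwebhookconfiguration <name>` or `kubectl delete mutatingwebhookconfiguration <name>`.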

Restore Failed

Problem Description: You cannot restore an application into a different namespace (OpenShift).

Solution: The user may not have sufficient permissions to restore the application into the target namespace.
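One way to check is `oc auth can-i`, which reports whether the current user may perform an action in a namespace. The sketch below checks `create` on a handful of common resource types; the list is illustrative (extend it with the kinds in your backup), and the function name is hypothetical.

```shell
# Hypothetical helper: report whether the current user can create common
# resource types in the restore target namespace.
# Usage: check_restore_permissions <target-namespace>
check_restore_permissions() {
  local ns="$1" res
  for res in deployments services persistentvolumeclaims secrets configmaps; do
    printf '%s: ' "$res"
    # can-i prints yes/no and exits non-zero on "no"; keep looping either way.
    oc auth can-i create "$res" -n "$ns" || true
  done
}
```

If any line prints `no`, a cluster administrator can grant access, for example with `oc adm policy add-role-to-user admin <user> -n <namespace>`.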

OOMKilled Issues

Some Trilio pods may go into the `OOMKilled` state. This can happen in environments with a large number of objects, where the pods need more memory and CPU than their default limits allow. In such cases, increase the memory and CPU limits as described below for your platform.
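Before raising limits, you can confirm which Trilio pods were OOMKilled. The sketch below reads each container's current and last termination reason; the function name is hypothetical, and `oc` can be used instead of `kubectl` on OCP.

```shell
# Hypothetical helper: list pods whose containers are (or were last) terminated
# with reason OOMKilled.
# Usage: find_oomkilled_pods <namespace>
find_oomkilled_pods() {
  local ns="$1"
  kubectl get pods -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.containerStatuses[*].state.terminated.reason}{" "}{.status.containerStatuses[*].lastState.terminated.reason}{"\n"}{end}' \
    | grep -F OOMKilled
}
```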

Kubernetes

Follow the API documentation to increase resource limits for Trilio pods deployed through the upstream Helm-based operator.

OCP

Within OCP, the memory and CPU values for Trilio are managed by the operator's ClusterServiceVersion (CSV). You can either edit the operator CSV YAML directly in the OCP console or patch the CSV from the command line.

For example, you might increase the memory limit of the web-backend pod to `2048Mi` and its CPU limit to `800m`.
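On OCP, the CSV change can be made with a JSON patch. This sketch assumes you look up the CSV name with `oc get csv -n <namespace>` and determine the index of the web-backend deployment inside the CSV with the first helper; the indexes, function names, and argument order are hypothetical. It uses the JSON Patch `add` operation, which replaces an existing value but fails if the container has no `resources.limits` object yet.

```shell
# Hypothetical helper: print the deployment names inside a CSV, one per line,
# in order; line N (counting from 0) is the deployment index used below.
# Usage: csv_deployments <namespace> <csv-name>
csv_deployments() {
  local ns="$1" csv="$2"
  oc get csv "$csv" -n "$ns" \
    -o jsonpath='{range .spec.install.spec.deployments[*]}{.name}{"\n"}{end}'
}

# Hypothetical helper: set the memory and CPU limits of one container of one
# deployment in the CSV with a JSON patch ("add" overwrites an existing value).
# Usage: patch_csv_limits <namespace> <csv-name> <deploy-index> <container-index> <memory> <cpu>
patch_csv_limits() {
  local ns="$1" csv="$2" d="$3" c="$4" mem="$5" cpu="$6"
  local base="/spec/install/spec/deployments/${d}/spec/template/spec/containers/${c}/resources/limits"
  oc patch csv "$csv" -n "$ns" --type=json -p "[
    {\"op\": \"add\", \"path\": \"${base}/memory\", \"value\": \"${mem}\"},
    {\"op\": \"add\", \"path\": \"${base}/cpu\", \"value\": \"${cpu}\"}
  ]"
}
```

For example, if `csv_deployments` lists `k8s-triliovault-web-backend` on the second line (index 1) and the relevant container is the first one, `patch_csv_limits <namespace> <csv-name> 1 0 2048Mi 800m` would set the values mentioned above.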

After applying this workaround, you may see two `k8s-triliovault-web-backend` pods. This happens because Trilio sets `revisionHistoryLimit` to 1, so any change to the CSV or the deployment leaves behind an extra ReplicaSet. To resolve this, delete the extra ReplicaSet manually or set `revisionHistoryLimit` to 0.
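To find the extra ReplicaSet, list the web-backend ReplicaSets in Trilio's namespace, oldest first. The function name is hypothetical; on OCP, `oc` can be used in place of `kubectl`.

```shell
# Hypothetical helper: list web-backend ReplicaSets, oldest first, with desired
# and ready replica counts, to identify the leftover one.
# Usage: list_web_backend_rs <namespace>
list_web_backend_rs() {
  local ns="$1"
  kubectl get rs -n "$ns" --sort-by=.metadata.creationTimestamp \
    -o custom-columns=NAME:.metadata.name,DESIRED:.spec.replicas,READY:.status.readyReplicas,CREATED:.metadata.creationTimestamp \
    | grep -E '^(NAME|k8s-triliovault-web-backend)'
}
```

Delete the leftover ReplicaSet with `kubectl delete rs <name> -n <namespace>`. On OCP, OLM reconciles the deployment from the CSV, so `revisionHistoryLimit` would be changed in the CSV's deployment spec (JSON patch path `/spec/install/spec/deployments/<index>/spec/revisionHistoryLimit`) rather than on the deployment itself.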

Uninstall Trilio

To uninstall Trilio and start fresh, delete all Trilio CRDs.

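A sketch of this cleanup, assuming all Trilio CRDs belong to the `triliovault.trilio.io` API group (check `kubectl get crd | grep -i trilio` for any others in your version). Deleting a CRD also deletes every custom resource of that type in all namespaces, so run it with `--dry-run=client` first. The function name is hypothetical; on OpenShift, `oc` can be used instead of `kubectl`.

```shell
# Hypothetical helper: delete every CRD whose name ends in .triliovault.trilio.io.
# Extra arguments (for example --dry-run=client) are passed to kubectl delete.
# WARNING: deleting a CRD removes all custom resources of that type cluster-wide.
# Usage: delete_trilio_crds [kubectl-delete-flags...]
delete_trilio_crds() {
  local crd
  for crd in $(kubectl get crd -o name | grep '\.triliovault\.trilio\.io$'); do
    kubectl delete "$crd" "$@"
  done
}
```

For example, `delete_trilio_crds --dry-run=client` prints what would be deleted; run `delete_trilio_crds` without arguments to perform the deletion.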

Coexisting with service mesh

A service mesh enabled for a namespace injects sidecar containers into its pods, which can cause Trilio containers to fail to exit. As a result, Trilio operations, including target creation, backups, and restores, may run indefinitely or fail with a timeout.

Run the following commands to determine whether a non-Trilio container is running inside a pod:

```shell
kubectl get pods -n NAMESPACE
kubectl describe pod PODNAME -n NAMESPACE
```
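To see at a glance which containers a pod runs, you can print only its init and regular container names; injected sidecars such as Linkerd's `linkerd-proxy` or Istio's `istio-proxy` then stand out. The function name is hypothetical.

```shell
# Hypothetical helper: print the init and regular container names of a pod.
# Usage: pod_containers <namespace> <pod-name>
pod_containers() {
  local ns="$1" pod="$2"
  kubectl get pod "$pod" -n "$ns" \
    -o jsonpath='{"init: "}{.spec.initContainers[*].name}{"\n"}{"main: "}{.spec.containers[*].name}{"\n"}'
}
```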

Trilio can coexist with a service mesh if the mesh supports excluding sidecar containers from pods based on labels. Trilio also offers optional flags that you can set as needed.

Edit the Trilio `tvm` CR with the following command. The namespace depends on the Kubernetes distribution and Trilio version.

```shell
kubectl edit tvm -n NAMESPACE
```

Add or edit the `helmValues` section for your service mesh, following that service mesh's best practices for assigning the label.
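As a hedged example for Linkerd, the change can be applied with `kubectl patch` instead of an interactive edit. Linkerd skips proxy injection for pods annotated with `linkerd.io/inject: disabled`. The key under `helmValues` that Trilio reads for pod annotations or labels is not confirmed here: `PodAnnotations` below is an assumption, so check the Trilio documentation for your version before applying. The function name is hypothetical; find the TVM name and namespace with `kubectl get tvm -A`.

```shell
# Hypothetical helper: add the Linkerd opt-out annotation through the TVM CR.
# ASSUMPTION: "helmValues.PodAnnotations" is illustrative; confirm the exact
# key for your Trilio version before running this.
# Usage: tvm_disable_linkerd <namespace> <tvm-name>
tvm_disable_linkerd() {
  local ns="$1" tvm="$2"
  kubectl patch tvm "$tvm" -n "$ns" --type=merge \
    -p '{"spec":{"helmValues":{"PodAnnotations":{"linkerd.io/inject":"disabled"}}}}'
}
```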

Note: Trilio for Kubernetes performs this exclusion automatically for Istio and Portshift service mesh sidecars, so no further action is required for them. Kubernetes 1.27 also includes an enhancement in this area.