Getting Started with Trilio on VMware Tanzu Kubernetes Grid (TKG)

Learn to install, license, and test Trilio on VMware Tanzu Kubernetes Grid (TKG)

Table of Contents

What is Trilio for Kubernetes?

Trilio for Kubernetes is a cloud-native backup and restore application. Because it is a cloud-native application for Kubernetes, all operations are managed with CRDs (Custom Resource Definitions).

Trilio uses Control Plane and Data Plane controllers to carry out the backup and restore operations defined by the associated CRDs. When a CRD is created or modified, the controller reconciles the definitions to the cluster.

Trilio gives you the power and flexibility to back up your entire cluster or to select specific namespaces, labels, Helm charts, or Operators as the scope for your backup operations.

In this tutorial, we'll show you how to install and test operation of Trilio for Kubernetes on your VMware Tanzu Kubernetes Grid deployment.

Prerequisites

Before installing Trilio for Kubernetes, please review the compatibility matrix to ensure Trilio can function smoothly in your Kubernetes environment.

Trilio for Kubernetes requires a compatible Container Storage Interface (CSI) driver that provides the Snapshot feature.

Check the Kubernetes CSI Developer Documentation to select a driver appropriate for your backend storage solution. See the selected CSI driver's documentation for details on the installation of the driver in your cluster.

Trilio will assume that the selected storage driver is a supported CSI driver when the volumesnapshotclass and storageclass are utilized.

Trilio for Kubernetes requires the following Custom Resource Definitions (CRDs) to be installed on your cluster: VolumeSnapshot, VolumeSnapshotContent, and VolumeSnapshotClass.

Installing the Required VolumeSnapshot CRDs

Before attempting to install the VolumeSnapshot CRDs, it is important to confirm that the CRDs are not already present on the system.

To do this, run the following command:
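One way to run this check, assuming kubectl is already pointed at the target cluster, is to filter the list of installed CRDs for the VolumeSnapshot resources. The function name below is illustrative and not part of any Trilio tooling.

```shell
# List installed CRDs and keep only the VolumeSnapshot ones.
# Prints a notice and returns non-zero when none are installed.
check_snapshot_crds() {
  kubectl get crd | grep -i volumesnapshot \
    || { echo "no VolumeSnapshot CRDs found"; return 1; }
}

# Usage: check_snapshot_crds
```

To see which API versions an installed CRD serves, `kubectl get crd volumesnapshots.snapshot.storage.k8s.io -o jsonpath='{.spec.versions[*].name}'` can be used.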

If CRDs are already present, the output should be similar to the output displayed below. The second column displays the version of the CRD installed (v1 in this case). Ensure that it is the correct version required by the CSI driver being used.

Installing CRDs

Be sure to install only the v1 version of the VolumeSnapshot CRDs.

Read the external-snapshotter GitHub project documentation. This is compatible with v1.22+.

Run the following commands to install the CRDs directly. Check the repo for the latest version:
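A minimal sketch of a direct install, assuming the CRD manifests are taken from the client/config/crd directory of the kubernetes-csi/external-snapshotter repository. The release tag is deliberately left as an argument; choose one from the repository that matches your CSI driver. The function name is illustrative.

```shell
# Base URL for raw files in the kubernetes-csi/external-snapshotter repository.
SNAPSHOTTER_RAW="https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter"

# Apply the three VolumeSnapshot CRDs from the given release tag.
# $1: external-snapshotter release tag (not fixed here; pick one from the repo)
install_snapshot_crds() {
  local tag="${1:-}"
  local crd
  [ -n "$tag" ] || { echo "usage: install_snapshot_crds <release-tag>" >&2; return 2; }
  for crd in volumesnapshotclasses volumesnapshotcontents volumesnapshots; do
    kubectl apply -f \
      "${SNAPSHOTTER_RAW}/${tag}/client/config/crd/snapshot.storage.k8s.io_${crd}.yaml" \
      || return 1
  done
}

# Usage: install_snapshot_crds <release-tag>
```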

For non-air-gapped environments, the following endpoints must be reachable from your Kubernetes cluster:

Access to the S3 endpoint if the backup target happens to be S3

Access to application artifacts registry for image backup/restore

If the Kubernetes cluster's control plane and worker nodes are separated by a firewall, then the firewall must allow traffic on the following port(s):

9443

Verify Prerequisites with the Trilio Preflight Check

Make sure your cluster is ready to install Trilio for Kubernetes by installing the Preflight Check Plugin and running the Trilio Preflight Check.

Trilio provides a preflight check tool that allows customers to validate their environment for Trilio installation.

The tool generates a report detailing all the requirements and whether they are met or not.

If you encounter any failures, please send the Preflight Check output to your Trilio Professional Services and Solutions Architect so we may assist you in satisfying any missing requirements before proceeding with the installation.

Two options for installation method:

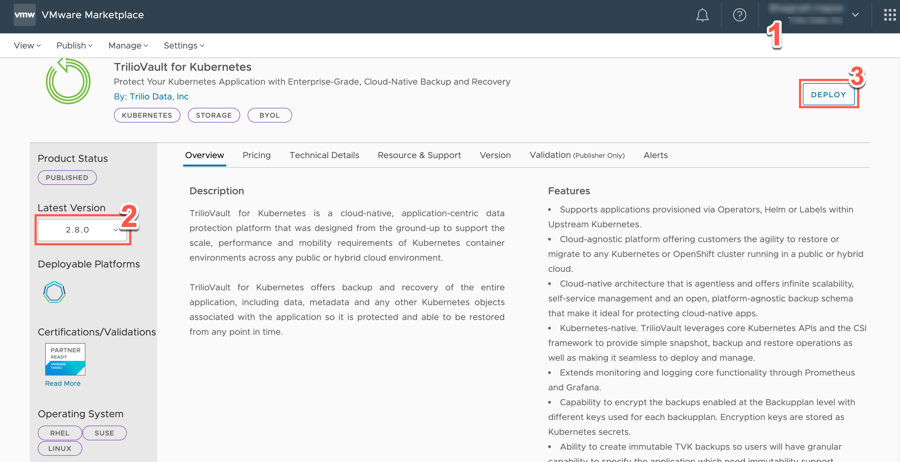

Trilio for Kubernetes is available as a certified Backup and Recovery solution in the VMware Marketplace for VMware Tanzu Kubernetes Grid (TKG) environments. However, there is currently no option to install T4K onto Tanzu clusters directly from the VMware Marketplace.

Use an existing helm repository

Use the standard helm install command as per the upstream Kubernetes method

Helm Installation (Method 1)

Users can download the T4K helm chart .tgz file, add it to their existing helm repository and then install the T4K chart from the same repository. To install using this method, perform the following steps:

Log in to VMware Marketplace.

From the page displayed, in the version dropdown, select the version of T4K that you wish to install. If you are free to choose, it is recommended to use the latest version.

Click Deploy.

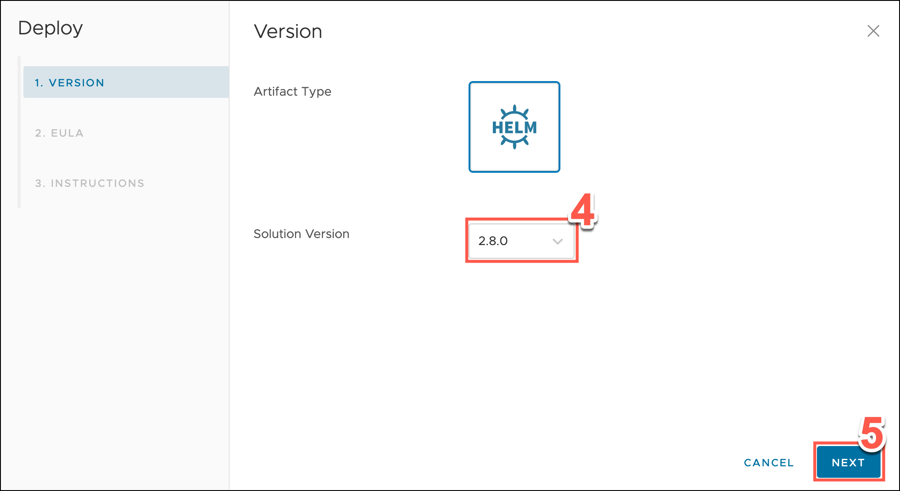

In the Deploy wizard displayed, confirm that the version is correct.

Click Next.

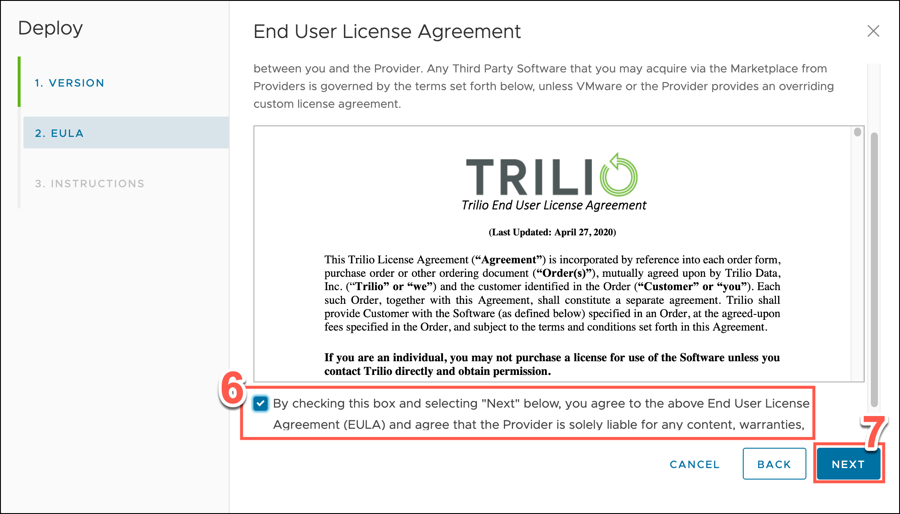

After reading the Trilio End User License Agreement, place a check/tick in the box to indicate your agreement with the terms.

Click Next.

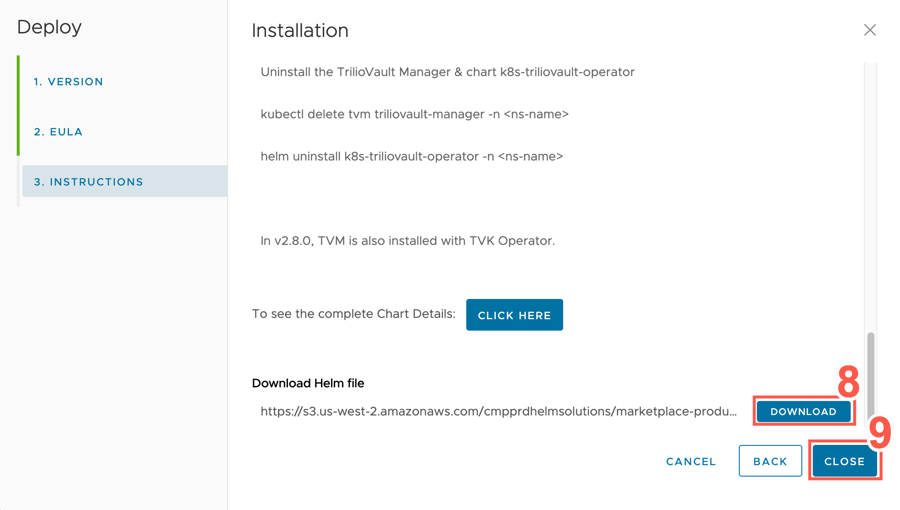

Click Download to download the .tgz file.

Click Close to Exit the Deploy wizard.

Now add the downloaded .tgz helm chart file to your existing helm repository.

Then use the helm install command to pull the image from your existing helm repository.

Perform steps to prepare environment for Trilio installation

Configure Antrea Networking

Set Antrea NetworkPolicyStats to true. To accomplish this, change the cluster context to management and run the following command:

On the workload cluster, restart the Antrea controller and agent with these commands:

Install VMware CSI Driver

First, disable automatic updates for the CSI driver by changing the kubeconfig context to the management cluster and running the following command:

Next, get a copy of the vSphere config secret and save it to a YAML file:

Then, remove the vsphere-csi package on the workload cluster:

If the secret has been removed by the package uninstall, recreate it with:

Download the CSI driver YAML:

If preferred, replace the namespace in this file from vmware-system-csi to kube-system to run the driver in the kube-system namespace.

Apply the driver on the workload cluster:

Enable the volume snapshot feature on the workload cluster:

Download the CSI Snapshot controller install script:

If preferred, replace the namespace in this file from vmware-system-csi to kube-system to run the driver in the kube-system namespace.

Run the script on the workload cluster:

After you have installed the CSI driver, a snapshot class must be created.
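A minimal sketch of creating such a snapshot class, assuming the v1 VolumeSnapshot CRDs are installed and the vSphere CSI driver is registered under its standard name, csi.vsphere.vmware.com. The class name and the Delete deletion policy are illustrative choices, not values taken from this guide.

```shell
# Create a VolumeSnapshotClass for the vSphere CSI driver.
# $1: class name (hypothetical default below; choose your own)
create_snapshot_class() {
  local name="${1:-vsphere-snapshot-class}"
  kubectl apply -f - <<EOF
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: ${name}
driver: csi.vsphere.vmware.com
deletionPolicy: Delete
EOF
}

# Usage: create_snapshot_class my-snapshot-class
```

Running `kubectl get volumesnapshotclass` afterwards should list the new class.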

Install Trilio using Helm Charts

Start by creating a new namespace on the workload cluster for Trilio, and switch to that new namespace. Run the following command to add Trilio's helm repository and install the Trilio Vault Operator:
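The namespace and Helm steps can be sketched as follows. The namespace trilio-system matches the one used in the licensing commands later in this guide. The repository URL, chart name, and release name are left as arguments because they are not given here, and the local repository alias trilio is a hypothetical choice.

```shell
# Namespace used for Trilio resources elsewhere in this guide.
TRILIO_NS="trilio-system"

# Create the namespace and make it the default for the current context.
prepare_trilio_namespace() {
  kubectl create namespace "$TRILIO_NS" || return 1
  kubectl config set-context --current --namespace="$TRILIO_NS"
}

# Add a Helm repository and install the operator chart from it.
# $1: repository URL, $2: chart name, $3: release name
# (all three are placeholders; take the real values from Trilio's documentation)
install_trilio_operator() {
  [ "$#" -eq 3 ] || {
    echo "usage: install_trilio_operator <repo-url> <chart> <release>" >&2
    return 2
  }
  helm repo add trilio "$1" || return 1
  helm repo update || return 1
  helm install "$3" "trilio/$2" --namespace "$TRILIO_NS"
}

# Usage:
#   prepare_trilio_namespace
#   install_trilio_operator <repo-url> <chart> <release>
```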

Upstream Kubernetes Installation (Method 2)

VolumeSnapshot CRDs are not shipped with the TKG cluster by default, so users must install them onto the TKG cluster before performing the T4K installation. To do so, follow the steps outlined in the prerequisites section for installing the required VolumeSnapshot CRDs. Failure to perform this step first may cause one or more pods not to run successfully.

The installation of Trilio for Kubernetes on the TKGm (Tanzu Kubernetes Grid for Multi-cloud) or TKGs (Tanzu Kubernetes Grid Service for vSphere) is the same as Upstream Kubernetes, so follow the upstream Helm Quickstart Installation guide.

Users must follow the instructions in the official documentation to install the VMware vSphere CSI Driver. T4K requires a CSI driver with volume snapshot capability to perform backup operations. VMware vSphere CSI Driver v2.5 and above supports the volume snapshot capability.

Potential Issues and Workarounds

Operator Pod Not Starting

There may be an initial failure during the operator installation due to the Operator pod not starting as the ReplicaSet (RS) couldn't be created. This may be due to an issue with the Pod Security Policy (PSP).

Please define a ClusterRole as shown below to resolve this issue:
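A ClusterRole for this purpose typically grants the use verb on a PodSecurityPolicy. The sketch below assumes the policy is named vmware-system-privileged and uses a hypothetical ClusterRole name. Both are assumptions, so check the policies on your cluster with `kubectl get psp` before applying anything.

```shell
# Create a ClusterRole that allows use of one PodSecurityPolicy.
# $1: PSP name (assumed default: vmware-system-privileged)
create_psp_clusterrole() {
  local psp="${1:-vmware-system-privileged}"
  kubectl apply -f - <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: psp-${psp}
rules:
- apiGroups: ["policy"]
  resources: ["podsecuritypolicies"]
  resourceNames: ["${psp}"]
  verbs: ["use"]
EOF
}

# Usage: create_psp_clusterrole
```

A ClusterRole takes effect only once it is bound to the relevant service accounts through a RoleBinding or ClusterRoleBinding.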

Antrea Feature Gates

After the PSP issue is resolved, it may also be necessary to enable NetworkPolicyStats in the antrea-agent configuration.

Here are the necessary steps:

Edit the configmap antrea-config-c2g88k9fbh in the kube-system namespace using the following command:

Within antrea-controller.conf, set antrea.config.featureGates.NetworkPolicyStats = true.

Also within antrea-controller.conf, set antrea.config.featureGates.AntreaPolicy = true.

Please refer to the attachments for more information.

Afterwards, run the following commands to restart deployment and daemonset:
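The edit and restart steps above can be sketched as follows, assuming the standard Antrea workload names (the antrea-controller Deployment and the antrea-agent DaemonSet in kube-system). The ConfigMap name is the one quoted in this guide; its suffix is likely to differ on your cluster.

```shell
# ConfigMap name as given in this guide; the suffix may differ per cluster.
ANTREA_CM="antrea-config-c2g88k9fbh"

# Open the Antrea ConfigMap in an editor to set the feature gates.
edit_antrea_config() {
  kubectl edit configmap "$ANTREA_CM" -n kube-system
}

# Restart the Antrea controller and agents so they reload the ConfigMap.
restart_antrea() {
  kubectl rollout restart deployment/antrea-controller -n kube-system || return 1
  kubectl rollout restart daemonset/antrea-agent -n kube-system
}

# Usage:
#   edit_antrea_config
#   restart_antrea
```

If the ConfigMap name does not match, `kubectl get configmap -n kube-system | grep antrea-config` shows the actual name.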

Trilio Manager Service Type

Depending upon the network architecture of your cluster, you may need to change the TVM service.type to LoadBalancer in order to obtain the EXTERNAL-IP for the ingress service, which will then allow you to access the TVK user interface.
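One possible way to make this change is to patch the Service directly. This is a sketch under assumptions: the Service name is not given in this guide and must be looked up first, and trilio-system is assumed as the install namespace. The function name is illustrative.

```shell
# Switch a Service to type LoadBalancer and show its external address.
# $1: Service name (look it up with: kubectl get svc -n trilio-system)
# $2: namespace (defaults to trilio-system, the namespace used in this guide)
expose_via_loadbalancer() {
  local svc="${1:-}"
  local ns="${2:-trilio-system}"
  [ -n "$svc" ] || { echo "usage: expose_via_loadbalancer <service> [namespace]" >&2; return 2; }
  kubectl patch svc "$svc" -n "$ns" -p '{"spec":{"type":"LoadBalancer"}}' || return 1
  kubectl get svc "$svc" -n "$ns"
}

# Usage: expose_via_loadbalancer <service-name>
```

The EXTERNAL-IP column may show pending until the load balancer is provisioned. If the change does not persist, the service type may need to be set through the Trilio installation's own configuration instead.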

Access Trilio UI

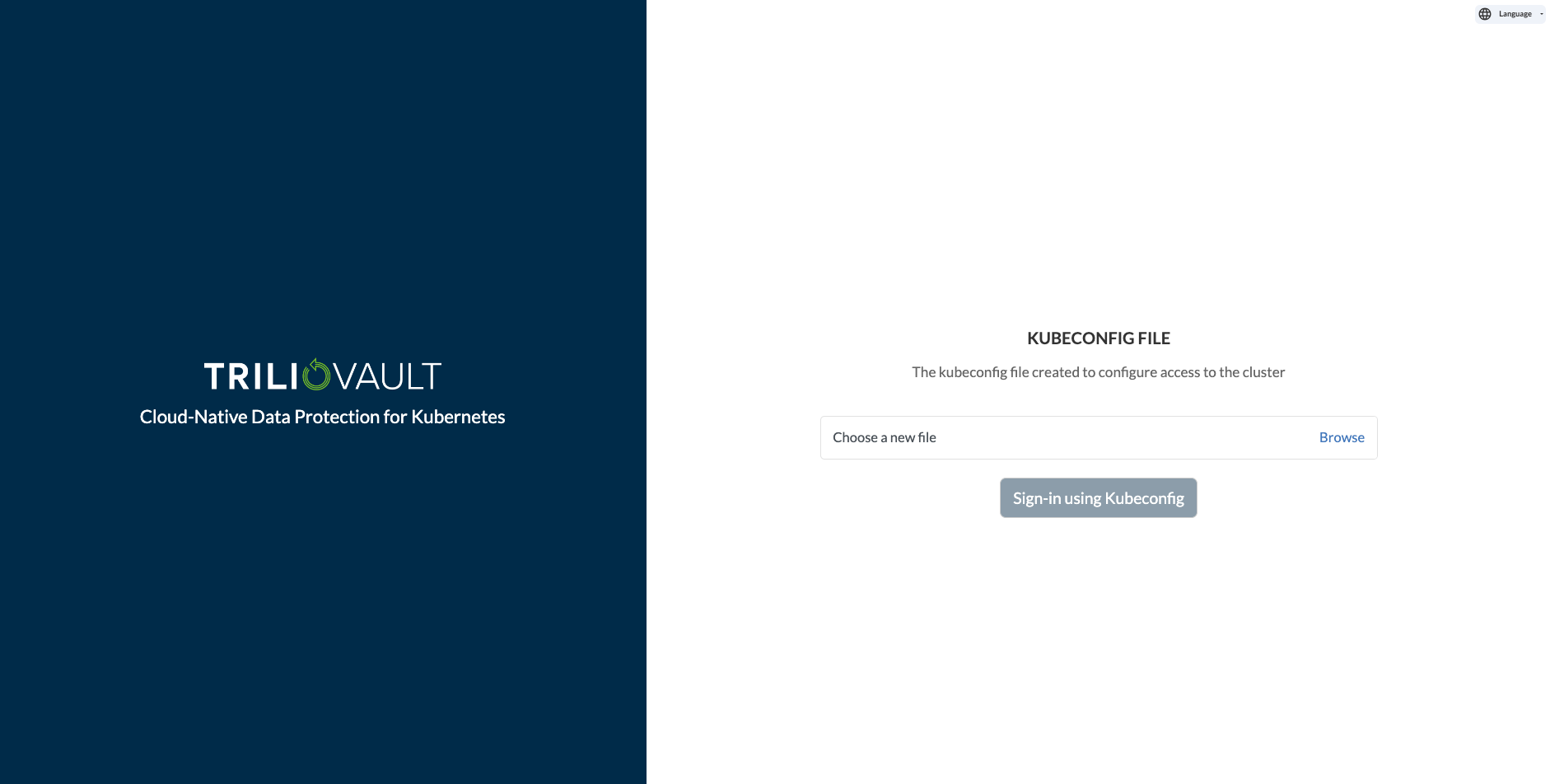

Independent of which install method you employ, when the installation is complete and all pods are up and running, you can access the Trilio web UI by pointing your browser to the configured Node Port.

Licensing Trilio for Kubernetes

To generate and apply the Trilio license, perform the following steps:

Though a cluster license enables Trilio features across all namespaces in a cluster, the license should only be created in the Trilio install namespace.

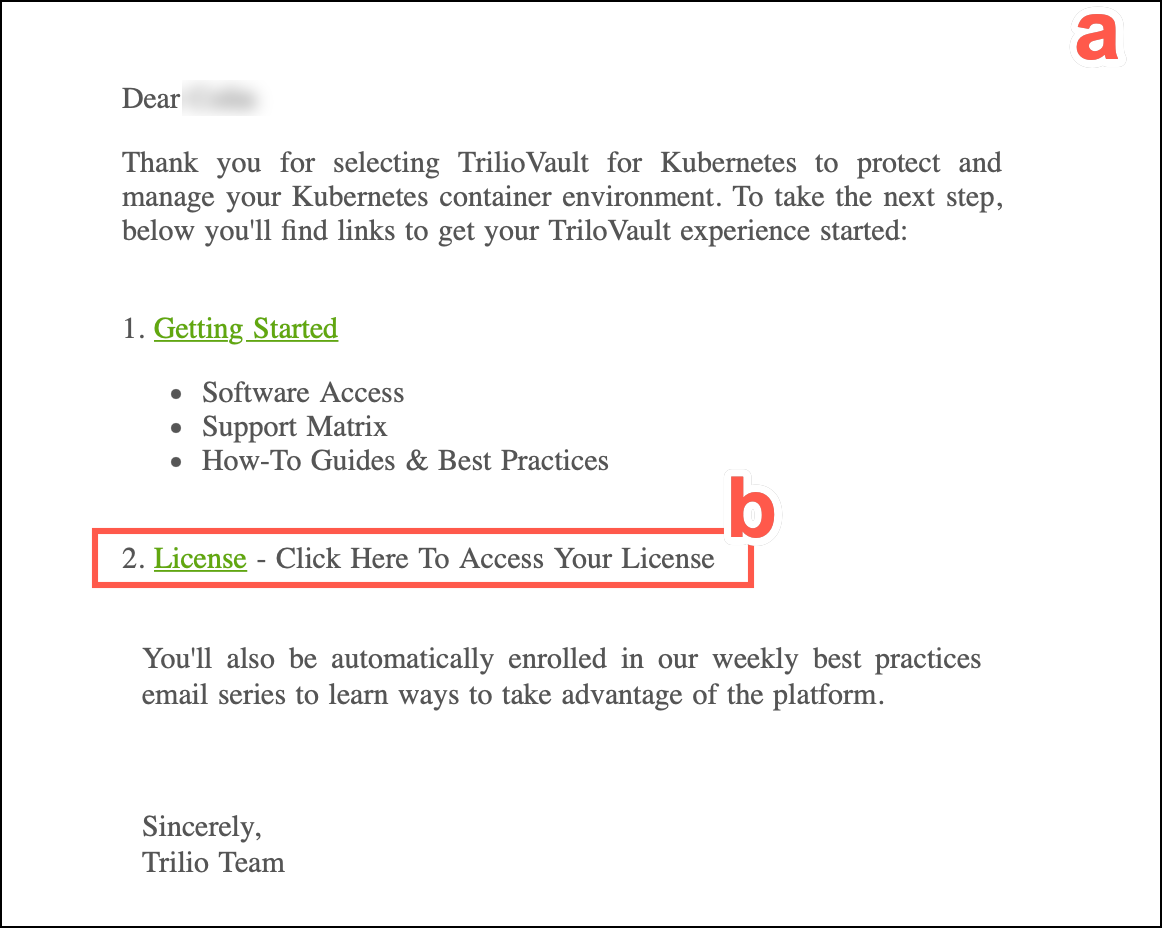

1. A license file must be generated for your specific environment.

a) Navigate to your Trilio Welcome email.

b) Click on the License link.

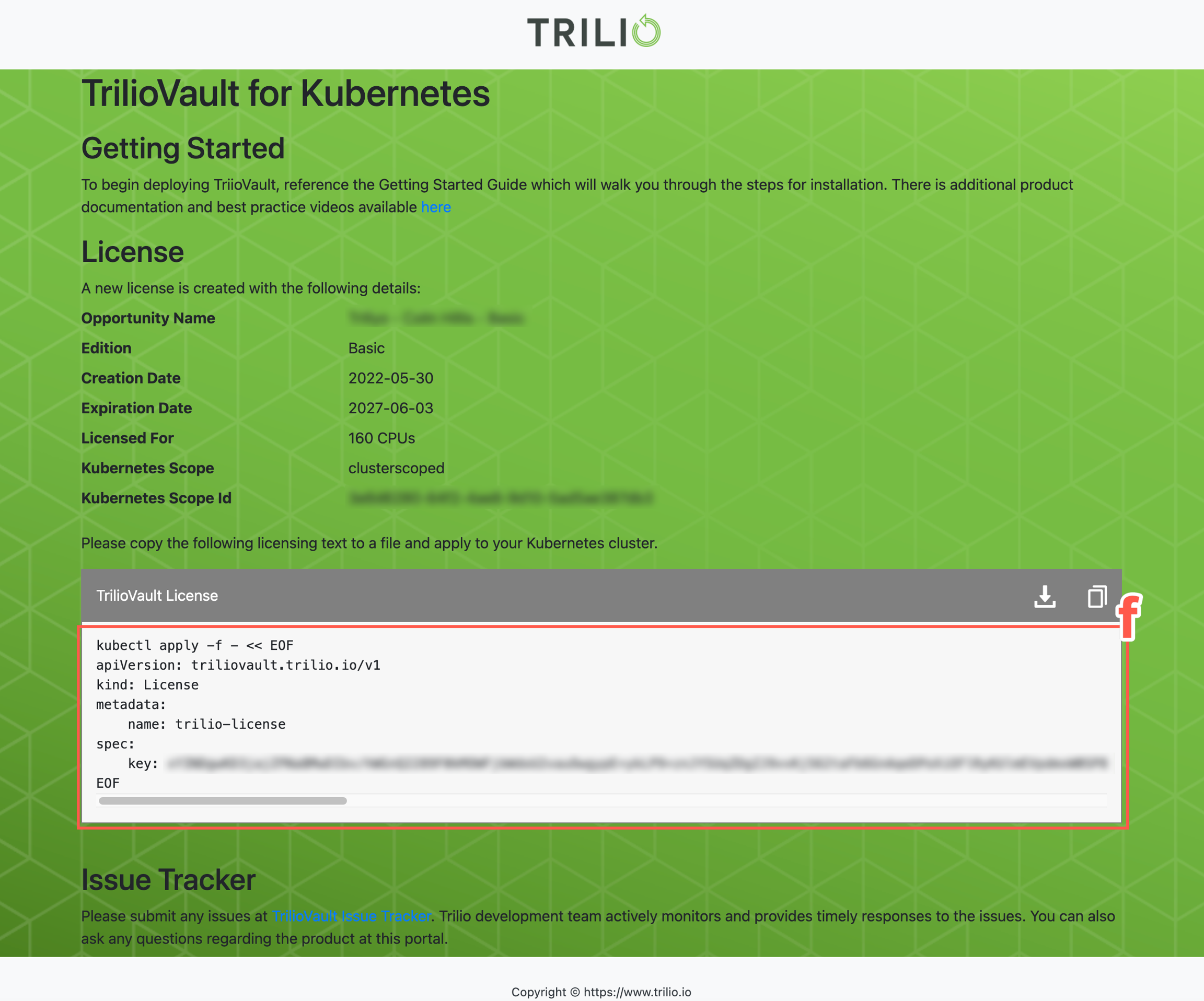

c) On the Trilio for Kubernetes License page, click Generate License.

d) On the details confirmation page, copy or download the highlighted text to a file.

You can use the download button to save the highlighted text as a local file or use the copy button to copy the text and create your file manually.

2. Once the license file has been created, apply it to a Trilio instance using the command line or UI:

Execute the following command:

3. If the previous step is successful, check that the output generated is similar to the following:

Additional license details can be obtained using the following:

kubectl get license -o json -n trilio-system
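The command-line steps above can be sketched as a single helper, assuming the license was saved to a local file and Trilio is installed in trilio-system. The function name and the file-existence check are illustrative additions.

```shell
# Apply a license file in the Trilio install namespace, then list licenses.
# $1: path to the license file
# $2: install namespace (defaults to trilio-system)
apply_trilio_license() {
  local file="${1:-}"
  local ns="${2:-trilio-system}"
  [ -f "$file" ] || { echo "license file not found: $file" >&2; return 1; }
  kubectl apply -f "$file" -n "$ns" || return 1
  kubectl get license -n "$ns"
}

# Usage: apply_trilio_license <licensefile>
```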

Prerequisites:

Authenticate access to the Management Console (UI). Refer to .

Configure access to the Management Console (UI). Refer to Configuring the UI.

If you have already executed the above prerequisites, then refer to the guide for applying a license in the UI: Actions: License Update

Upgrading a license

A license upgrade is required when moving from one license type to another.

Trilio maintains only one instance of a license for every installation of Trilio for Kubernetes.

To upgrade a license, run kubectl apply -f <licensefile> -n <install-namespace> against a new license file to activate it. The previous license will be replaced automatically.

Troubleshooting

Problems? Learn about Troubleshooting Trilio for Kubernetes