S3 as Backup Target

This article describes the approach that Trilio has taken to support S3 compatible storage as backup target

Introduction

Since the introduction of Amazon S3, object storage has become the storage of choice for many cloud platforms. Object storage offers very reliable, infinitely scalable storage by utilizing cheap hardware. Object storage has become the storage of choice for archival, backup and disaster recovery, web hosting, documentation, etc.

Impedance mismatch between Object Storage and Trilio backup images

Unlike NFS or block storage, object storage does not support file system POSIX APIs for accessing objects. Objects need to be accessed in its entirety. Either the object should be read as a whole or modified as a whole.

For Trilio to implement all its features such as retention policy, forever incremental, snapshot mount and one click restoreit needs to layer POSIX file semantics for objects.

Usually backup images tend to be large so if each backup image is created as one object then manipulating backup image requires downloading the entire object and uploading the modified object back to object store. These types of access requires enormous amount of local disk and network bandwidth and does not scale for large deployments.

Simple operation such as snapshot mount operation or PV restore operation may require accessing the entire chain of overlay files. Accessing latest captured point-in-time using the appropriate overlay file is easy with NFS types of storage, however for object store it requires to you download all overlay files in the chain and then mapping the top of overlay file as a virtual disk to file manager.

FUSE Plug in for S3 bucket

Filesystem in Userspace (FUSE) is a software interface for Unix and Unix-like computer operating systems that lets non-privileged users create their own file systems without editing kernel code. Any data source can be exposed as a Linux file system using a FUSE plugin.

There are number of implementations of S3 FUSE plugins in the market. All of these implementations download the entire object to the local disk to provide read/write functionality. These implementations do not scale for large backup images.

The Trilio FUSE plugin implementation for S3 bucket is inspired by the inode structure of the Linux file system. Each inode structure has essential information of the file including creation time, modified time, access time, size, permissions and list of data blocks.

Reading and writing files happen at block level. Let's say if an application were to read/write a file at offset 1056 of length 24 bytes and the block size of the file system is set to 1024, file system reads the second block and either reads or modifies the data at offset 22 byte in the block.

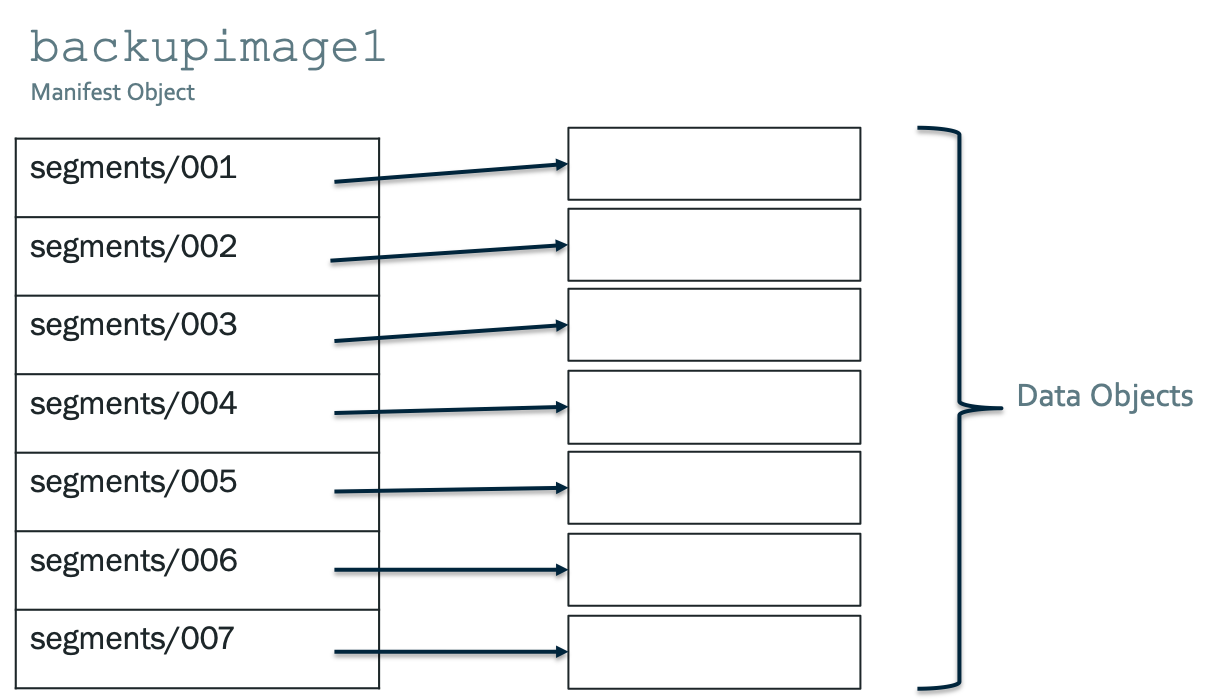

The Trilio FUSE plugin for S3 has a similar construct. For each file that application creates using POSIX API, the FUSE plugin creates a manifest object which is very similar to inode structure. The manifest object metadata fields hold file attributes such as creation time, access time, modified time, size, etc. The contents of the manifest reflects the list of paths to data objects. Each data object is similar to data block in a Linux file system but each data object is much larger in size. The default value is set to 32 MB and is not configurable. The FUSE plugin implements read/write operations on the file very similar to Linux file system. It calculates the data object index that corresponds to read/write offset, downloads the object from object store and then reads/writes the data in the data block. If the operation is a write operation it then uploads the data object back to object store.

The Trilio FUSE plugin breaks a large file into fixed size data blocks, it can scale well irrespective of the file size. It delivers the same performance with 1 TB file or with 1 GB file. Since it only downloads/uploads data blocks, it does not require any storage from local disk.

In the above example, backupimage1 is around 224 MB, which is split into 7 data objects of 32 MB. Read/write implementation of fuse plugin calculates the data object based on the read/write offset and fetches the corresponding data object from object store. It reads or modifies the data by length at offset adjusted to data object and uploads the data object into object store if modified.

To improve read/write performance, the FUSE plugin caches 5 recently used data objects per file in memory so the datamover must have enough RAM to accommodate 160MB for cached datablocks.